The SSIS Catalog, first introduced with the release of SQL Server 2012 Integration Services (SSIS 2012), is a big step forward in Data Integration Lifecycle Management (DILM) with SQL Server Integration Services (SSIS). Like all software, the SSIS Catalog performs some tasks admirably and other tasks… not so admirably. In this post, I will share some thoughts on SSIS Catalog logging.

The Good

Awesomeness…

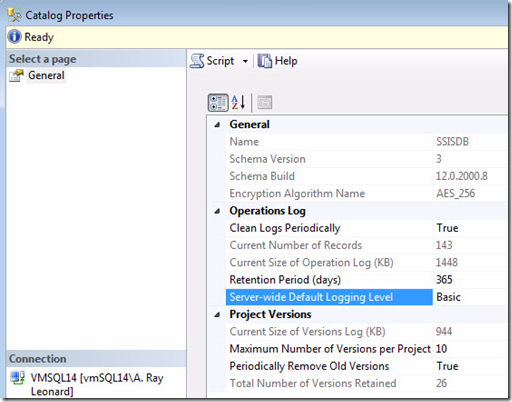

My favorite SSIS Catalog feature is the integrated logging. It Just Works. I configure a default Logging Level when I create an SSIS Catalog (or later) and I don’t have to think about logging unless or until I want to change the Logging Level for some reason. And I can make that change on a per-package-execution basis, even. SSIS Catalog logging is fast and requires little or no work on my part to maintain. Other logging defaults configured at the time of the Catalog creation (or later) include the Retention Period and Clean Logs Periodically properties:

I can run my data integration enterprise with these defaults. And I sometimes do.

The OK

Time-Deterministic Logging

The SSIS Catalog will log some events in an order that is different from the order in which the events occurred in real time. In my experience, it’s possible to see events that occur within a few milliseconds logged out of order. I’ve explained this to a few auditors and, to date, none have disqualified a system because of this behavior.

The Bad

Log Maintenance

The way the SSIS Catalog cleans these records (in 2012 and 2014, at least) can be troublesome. Short version: the maintenance process executes daily (by default) and relies on cascading deletes that span a few levels in the logging hierarchy. I won’t bore you with the remainder of the technical details. I will just tell you that if you have lots of execution and event metadata stored in the SSIS Catalog log tables for a particular day, the log cleaning process can take a long time and consume lots of server resources.

Reports

This is potentially Ugly, but it is at least Bad. In order for someone to view the SSIS Catalog reports that ship with the solution (in SSIS 2012 and 2014), the following must be true:

-

The user must have SQL Server Management Studio (SSMS) installed.

-

The user must be a member of the ssis_admin or sysadmin roles (…in 2012 and 2014. Starting in 2016 there’s a new role named “ssis_logreader” that does what it says.).

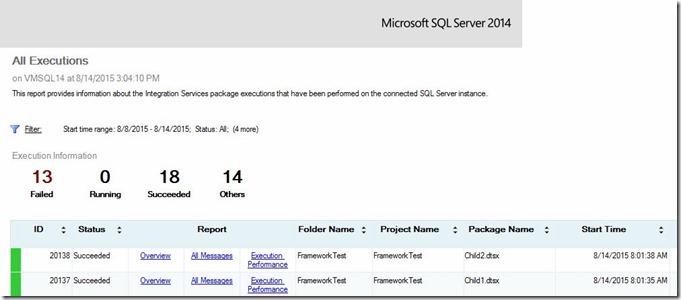

The reports are excellent:

I have no issue with Database Administrators (DBAs), Database Developers, or Data Integration Developers using SSMS and the SSIS Catalog reports. I worry some about Operations people and project stakeholders needing SSMS and elevated permissions just so they can access operational or historical enterprise data integration information. Am I saying I do not want Operations people and project stakeholders to see the data contained in the SSIS Catalog reports? No, I most certainly am not. My concern is that someone will accidentally delete a mission-critical SSIS package from a Production SSIS Catalog. (Note: DBAs, Database Developers, and Data Integration Developers are just as at risk of accidentally deleting something as Operations people or project stakeholders.)

You see, if you are a member of either the ssis_admin or sysadmin role, you have permission to delete SSIS packages. You also have permission to do a whole lot of other things that, frankly, you shouldn’t need to do.

The Ugly

The defaults for SSIS Catalog logging properties are shown in the screenshot above. The default logging level is Basic. I like that setting. I also like cleaning the logs periodically.

I cringe a little at the default retention period. Why? Experience. I’ve been an enterprise data integration architect for years. Doing that job will teach you to think about stuff normal people don’t consider. Here’s why I cringe at a 365-day default retention period: What happens 1 Jan? Most enterprises run mission-critical, regulation-required, end-of-year reports on or around 1 Jan. These processes may only execute once per year (but they’re tested each time a change is deployed, right? Don’t get me started…). They can be big and complex processes and they usually execute across a larger domain of data than nightly, weekly, monthly, or quarterly processing.

What does that mean for the SSIS Catalog logs? More messages!

Wait, it gets worse.

Where are your support people on or around 1 Jan? Sitting at their desks watching these processes run to completion? Gosh, I hope not. Let’s say you manage to make it through that first SSIS-Catalog-managed year-end processing cycle without calling in too many people. Good. Fast-forward one year – or 365 days, as the Logging Retention Period property of the SSIS Catalog likes to call it. The default logging retention period also kicks in on or around 1 Jan (you think you have a 25% chance of hitting a leap year, but Murphy is way better at statistics than you or me…), right about the time your extra long data integration processing is trying to run. That smell coming from your server? That’s smoke.

Recommendations

Logging

I can hear you thinking, “Great. Now what, Andy?” I’m glad you asked! Ask yourself, “Self, do we really need a year of historical enterprise data integration logging?”

If the answer is “Yes, we need at least a year of log data,” then I recommend you increase the Retention Period (days) property to some number greater than 365 – maybe 380, provided fifteen days will get your enterprise beyond the year-end process. The goal is to have the log maintenance from last year’s year-end processing occur sometime after this year’s year-end processing.

If the answer is “No, we don’t need a year a log data,” I recommend you set the Retention Period (days) property to the number of days you need to retain.

Reports

If you’re reading this and you have an SSIS Catalog in your enterprise, then you also have SQL Server Reporting Services (SSRS). You can write your own reports to execute against the logs and data integration metadata stored in the SSISDB database. SSRS reports can be deployed to run in a web browser. That makes them read-only from a user perspective.

To be fair, the SSIS Catalog reports built into SSMS are also read-only. But SSMS is anything but read-only. Good DBAs will read this and (rightfully) think, “Well, enterprise security should define who has permissions to read and write and make changes to data in SSMS.” I concur, and that’s almost correct. The SSIS Catalog node in the SSMS Object Browser is different. Permissions checks are actually hard-coded into some of the SSIS Catalog Views, even. In order to view the reports, they must be a member of either ssis_admin or sysadmin.

To also be fair, the user still must be a member of either ssis_admin or sysadmin to view data via SSIS Catalog Views from an SSRS solution deployed so that it runs in a web browser. Two thoughts:

-

It’s a web browser and the reports are (hopefully) only executing SELECT statements.

-

If you design your own reporting solution in SSRS, you can define how you access the data. You do not need to use the SSIS Catalog-supplied views with their hard-coded role checks, you can write your own views or stored procedures.

I and others have built custom SSRS reporting solutions that read the same SSIS Catalog execution logs and metadata. At Enterprise Data & Analytics, we are here to help™. Check out the Catalog Reports (free and open source!) project at DILM Suite.

Conclusion

The SSIS Catalog contains a great set of Data Integration Lifecycle Management tools, reports, and functionality. The logging and reporting is very good, but there are things of which you should be aware.

:{>

I am here to help:

Contact Andy!

Learn more:

SSIS Design Patterns training in London, UK, 7-10 Sep 2015

Advanced SSIS Training with Andy Leonard & Tim Mitchell in Reston Virginia Dec 7 – 10, 2015

DevOps and Data Integration with SSIS (at IT/Dev Connections in Las Vegas, NV 15 Sep 2015)

Designing an SSIS Framework (at IT/Dev Connections in Las Vegas, NV 16 Sep 2015)

Using Biml to Automate SSIS Design Patterns (at the PASS Summit 2015 in Seattle, WA 27-30 Oct 2015)

Hacking the SSIS Catalog (at the PASS Summit 2015 in Seattle, WA 27-30 Oct 2015)

Stairway to Integration Services

Linchpin People Blog: SSIS

Stairway to Biml

Just a comment that there are some really good reporting options for SSIS that are already out there – you don’t have to start from scratch. Specifically, SSIS Reporting Pack out on Codeplex by Jamie Thompson is a really excellent project.

I know you know my thoughts on how logging is handled with SSIS. I really don’t like the cascading deletes but I thought it would be helpful to mention that SP2 did include a fix to help with performance of purging SSISDB.

https://support.microsoft.com/en-us/kb/2972285

Agreed on that one, John. The cascading deletes are a pain if you don’t catch them before that first purge and you haven’t created the proper indexes. I was glad to see that they’re finally fixed if you have 2012SP2 or later.

I’ve used SSIS Reporting Pack before and plan to use it again. I’ve also appreciated Pragmatic Works’ tool enough to be willing to buy it in the past. Their logging and ability to "see" the package execute in near-real-time is pretty cool and helpful for minimal fuss. Yes, it costs money, but the cost was sufficiently low to make it worthwhile. Of course, new gig we’re looking at implementing some standards, frameworks, and integrated logging on our own before trying something else.

While I do agree that project deployment model is very good, I do not think that the SSISDB is well designed.

Our WH ETL has to get data from 60 different systems, and we have 7 different system types. The most common type has 30 systems. I have written a control system that controls the pre audit, stage, load and post audit, as each system comes available to load (after midnight on each system depending on their time zone).

We recently upgraded to project deployment model and immediately started having performance issues. The load set was takings over 1.5 hours longer. The load consists of about 2300 package executions in total.

We ended up having to turn logging off, as it was slowing everything down. We now use some logging in the control system to determine if the package failed on last execute for that system, then just log for any subsequent executions to get the error message.

We used to execute a control package, which would check if there was anything to do. Now we execute some sql, which does the check, and only if there is any work to do does it start the control ssis package.

We also saw large gaps between the start of execute of a package, and when the package actually started to run using the catalog execute time, and the first message from basic logging. This could be as high as 8 – 10 seconds. We haven’t resolved this as I have no idea how to sort this out.

We also saw a large increase in some package executions, that were doing a lot of partial cache lookups. I tried to optimize these by doing more full cache lookups, but that also made the packages more complex as I can’t pre load all data. I don’t know why these partial cache lookups are so much slower since the upgrade to project model.

Hey Michael S,

Have you tried to set the delay validation to true and see if they speeds up the package execution?

In the past I had SQL servers that were geographically far away from the SSIS server, and due to the latency it would cause the ssis package to take a lot longer to complete all the validation within the package.

I was confident that the source tables wouldn’t change over time. And to be honest if something did change it is going to fail on the validation or the execution. So by having one less step meant the execution of the ssis packages started immediately.

I hope that helps.

Gilbert

Hey Michael S,

Have you tried to set the delay validation to true and see if they speeds up the package execution?

In the past I had SQL servers that were geographically far away from the SSIS server, and due to the latency it would cause the ssis package to take a lot longer to complete all the validation within the package.

I was confident that the source tables wouldn’t change over time. And to be honest if something did change it is going to fail on the validation or the execution. So by having one less step meant the execution of the ssis packages started immediately.

I hope that helps.

Gilbert

Hi Andy,

Interesting article but I don’t fully agree with the explanation of the removal of outdated log records. While I do agree that 365 days as retention period is way too much in most cases, I do not think there’s a huge issue at Jan 1. In your explanation it seems that the logs will only be cleaned once per year while this job is actually running on a daily basis by default. So on Jan 1, only the records of Jan 1 from the year before would be deleted.

Best regards,

Valentino.

Hi Valentino,

You are correct. I added language to clarify.

Thank you,

Andy