Thanks to the hard work of the Microsoft Fabric Data Factory Team, it’s now possible to mount an Azure Data Factory (ADF) in Fabric Data Factory. This post describes one way to do so with an existing Azure Data Factory. In this post, we will:

- Mount an existing Azure Data Factory in Fabric Data Factory

- Open the Azure Data Factory in Fabric Data Factory

- Test-execute two ADF pipelines

- Modify and publish an ADF pipeline

Mount an existing Azure Data Factory in Fabric Data Factory

To tinker with this cool new functionality, first connect to Fabric Data Factory. Once connected, click the “Azure Data Factory (preview)” tile:

When the “Mount Azure Data Factory” blade displays,

- Select the Azure Data Factory you wish to mount in Fabric Data Factory

- Click the “OK” button:

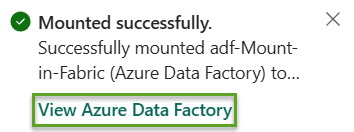

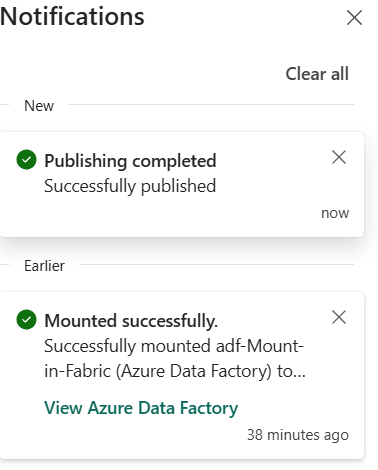

Once the Azure Data Factory is mounted, a notification similar to that shown below is displayed. Click the “View Azure Data Factory” link to proceed:

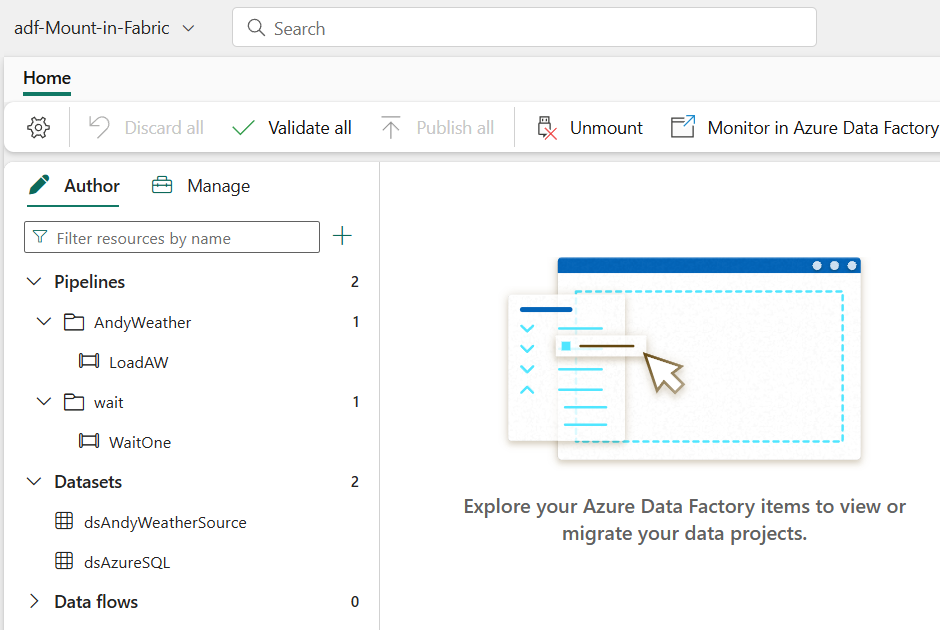

Open the Azure Data Factory in Fabric Data Factory

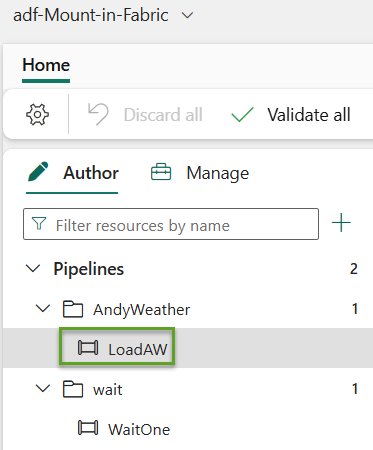

The Azure Data Factory displays:

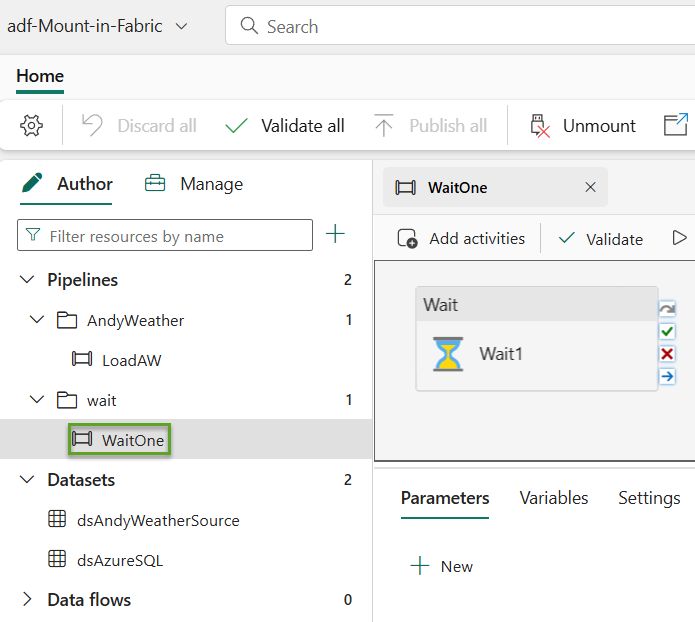

Click an ADF pipeline to open it. The pipeline I select to open is named “WaitOne.” WaitOne is a very simple ADF pipeline that contains only a Wait activity configured to wait the default (1) second:

Test-execute Two ADF Pipelines

My Azure Data Factory contains two pipelines:

- WaitOne – which contains a single Wait activity configured to wait the default (1) second

- LoadAW – which reads data from a CSV file stored in an Azure Blob Storage container via SAS URI, and sinks that data in an Azure SQL Database table via SQL authentication
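For readers who think in JSON, the two pipelines above can be sketched roughly as follows. This is a minimal sketch, not an export of my actual pipelines: it assumes the usual ADF pipeline JSON layout (a `Wait` activity with `waitTimeInSeconds`, and a `Copy` activity with a `DelimitedTextSource` and an `AzureSqlSink`). The activity names and the dataset names `WeatherCsv` and `WeatherTable` are hypothetical placeholders.

```python
import json


def wait_pipeline(name: str, seconds: int = 1) -> dict:
    """Build a pipeline definition that holds a single Wait activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "Wait1",  # hypothetical activity name
                    "type": "Wait",
                    "dependsOn": [],
                    "typeProperties": {"waitTimeInSeconds": seconds},
                }
            ]
        },
    }


def copy_pipeline(name: str, source_dataset: str, sink_dataset: str) -> dict:
    """Build a pipeline definition that copies delimited text into Azure SQL."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "Copy data1",  # hypothetical activity name
                    "type": "Copy",
                    "dependsOn": [],
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                }
            ]
        },
    }


# Dataset names below are assumptions made for illustration only.
wait_one = wait_pipeline("WaitOne")
load_aw = copy_pipeline("LoadAW", "WeatherCsv", "WeatherTable")

print(json.dumps(wait_one, indent=2))
print(json.dumps(load_aw, indent=2))
```

Building the definitions as plain dictionaries keeps the sketch free of any SDK dependency. The connection details (SAS URI and SQL credentials) would live in linked services, which are not shown here.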

You can learn more about the applications that support the AndyWeather application by reading my post titled “AndyWeather Internet of Things (IoT)”.

Click the “Debug” button to test-execute the ADF pipeline named “WaitOne”:

If all goes as expected, the pipeline test-execution succeeds:

Open a different ADF pipeline. In this case, I open a pipeline named “LoadAW.” LoadAW is designed to read weather data collected from my personal weather station – AndyWeather – from a CSV file stored in an Azure Blob Storage container and write the contents to an Azure SQL Database sink. This test execution of the LoadAW pipeline tests connectivity to Blob storage, which uses a Shared Access Signature URI configured at the container scope, and connectivity to the Azure SQL Database, which uses SQL authentication:

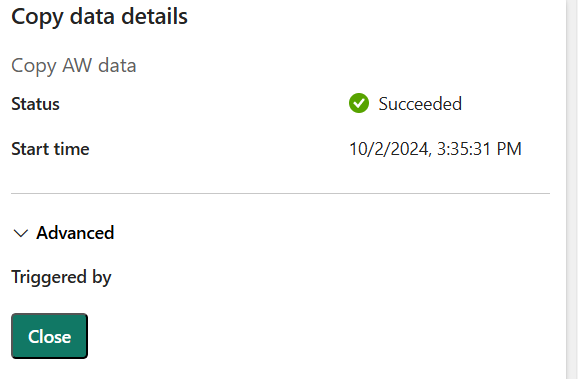
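If the Blob storage side of a test like this fails, one quick thing to rule out is an expired or mis-scoped SAS. The sketch below is only an illustration using the Python standard library. It assumes the standard SAS query parameters (`sr` for the signed resource, where `c` means container, `sp` for permissions, and `se` for the expiry time). The storage account, container name, and signature in the sample URI are made up.

```python
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def describe_sas(sas_uri: str, now: Optional[datetime] = None) -> dict:
    """Summarize the scope, permissions, and expiry state of a SAS URI."""
    now = now or datetime.now(timezone.utc)
    parts = urlsplit(sas_uri)
    # parse_qs returns a list per key; keep the first value of each.
    params = {key: values[0] for key, values in parse_qs(parts.query).items()}

    expiry = None
    expiry_text = params.get("se")
    if expiry_text:
        # Normalize a trailing "Z" so fromisoformat works on older Pythons.
        expiry = datetime.fromisoformat(expiry_text.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)

    return {
        "container": parts.path.strip("/"),
        "container_scope": params.get("sr") == "c",
        "permissions": params.get("sp", ""),
        "expired": expiry is not None and expiry <= now,
    }


# Hypothetical SAS URI: account, container, and signature are placeholders.
sample_uri = (
    "https://example.blob.core.windows.net/weather"
    "?sv=2022-11-02&sr=c&sp=rl&se=2030-01-01T00:00:00Z&sig=REDACTED"
)
summary = describe_sas(sample_uri, now=datetime(2025, 1, 1, tzinfo=timezone.utc))
print(summary)
```

A check like this reads the token only. It does not contact the storage account, so a valid-looking summary is no guarantee that the signature itself will be accepted.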

As before, click the “Debug” button to test-execute the second pipeline. If all goes as expected, the pipeline execution succeeds. Click the “Copy data details” link to examine the results (at the time of this writing):

The “Copy data details” blade does not (yet) display the same information shown in Azure Data Factory, but I am confident it will very soon:

Modify and Publish an ADF Pipeline

Test ADF pipeline edit functionality by making a small change to the Copy data activity:

After the edit, click the “Publish all” button to test ADF publishing functionality. The “Publish all” blade displays. Click the “Publish” button to publish the ADF pipeline from within Fabric Data Factory:

If all goes as expected, publication succeeds:

Conclusion

While not all functionality for managing ADF pipelines is (currently) available in Fabric Data Factory, an impressive amount of functionality is present. Thank you and kudos to the Fabric and Azure Data Factory teams for this huge step forward!

:{>

Hi Andy, can you tell me how you got the list of ADF pipelines on the Fabric canvas?

Hi Sachin,

Thank you for reading my blog and for asking a great question!

Once you mount an Azure Data Factory in Fabric Data Factory, the ADF pipelines are available in the Fabric Data Factory experience. Does that answer your question?

Thank you,

Andy

Hi Andy,

I just want to know how the ADF pipelines will run in Fabric. In ADF we have the integration runtime and the self-hosted IR. What runs these ADF pipelines in the background in Fabric?

Hi Amith,

Thank you for reading my post and for your excellent question. I don’t know the answer to that question, but I am investigating today and tomorrow before delivering A Day of Azure Data Factory on Wednesday because I intend to demonstrate mounting ADF in Fabric Data Factory as part of that training!

:{>