Do you need to use SSIS to load some data from a large source? This post covers some thoughts on a couple/three patterns you may apply to help. I can hear you thinking, “Which pattern should I use, Andy?” That’s an excellent question! The answer is, “It depends.” You should test each pattern and see which performs best for you.

Pattern 0: Just Do It

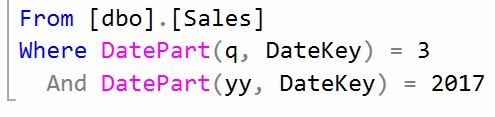

The first pattern is easy. Select the rows you need in an SSIS Data Flow Task OLE DB Source adapter. You can build a query in SSMS (like I did here):

Is there anything wrong with this query? Well, maybe. Functions – like DatePart() – in the WHERE clause can slow things down a bit. It’s a valid pattern, though, so you should use it in your SSIS Data Flow Task’s OLE DB Source adapter to test performance.

Pattern 1: Stage Select Rows Using a Data Flow

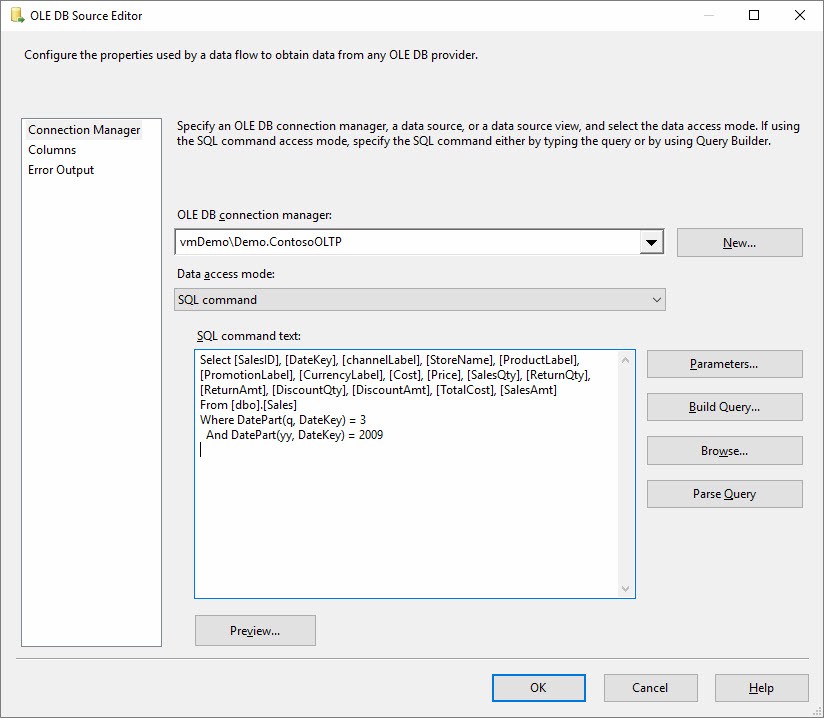

Another option is to stage the subset of rows you’re after – or just a list of uniquely-identifying columns from those rows – also known as a business key or natural key. You could create a Data Flow Task that selects the rows you wish to load, similar to that shown here:

Those rows could be loaded to a staging table and later read from that table.

Reading all the columns – as shown above – is really just adding a step that you don’t need if your goal is to load data straight from a source to a destination. If, however, you need to join to other tables or lookup reference data, loading more than just the business keys into a staging table may be the way to go.

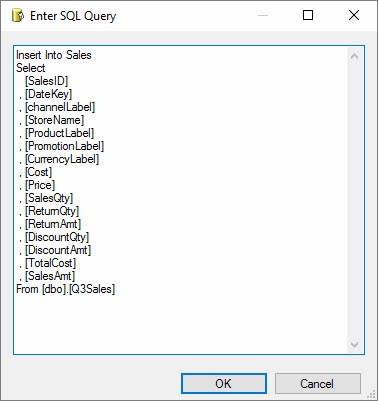

Pattern 2: Load Staged Rows Using T-SQL

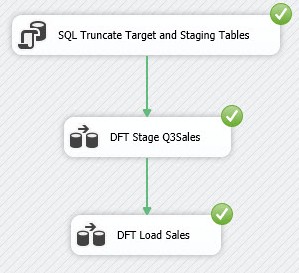

A third option is to use T-SQL to load the target table after you’re loaded a stage table via Data Flow Task. Configure the T-SQL query similar to that shown here:

Your Control Flow now appears as shown:

Pattern 3: Stage and Load Rows Using T-SQL and Execute SQL Tasks

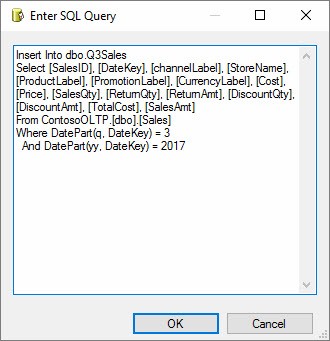

Another option is to stage the subset of rows you’re after using an Execute SQL Task and then use a second Execute SQL Task to load those rows into the target table. Your stage query would appear as shown here:

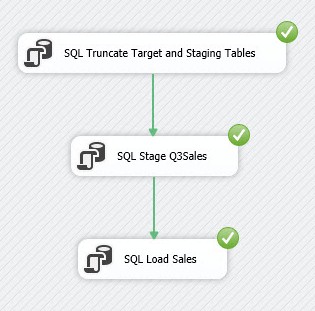

The Control flow would now contain an Execute SQL Task for Staging and another for Loading:

Pattern 4: Skipping the Stage Step Altogether

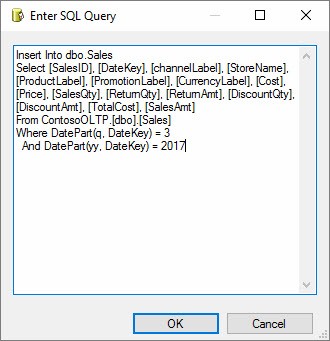

If you’re just doing a simple single-table load (like in this demo), and if the source and destination are on the same instance, you can often achieve great performance using T-SQL to load from the source directly to the destination:

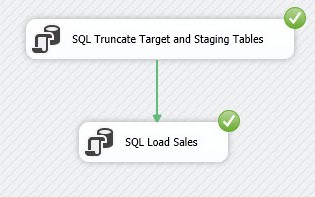

Your Control Flow becomes remarkably simple, as shown here:

Conclusion

Staging a subset of the data you need, or even unique keys for a subset of the desired data, is one way to improve load performance.

:{>