In my experience, people either love or hate triggers in Transact-SQL. As a consultant, I get to see some interesting solutions involving triggers. In one case, I saw several thousand lines of T-SQL in a single trigger. My first thought was, “I bet there’s a different way to do that.” I was right. The people who’d written that trigger was unaware there was another way to solve the problem.

In my experience, people either love or hate triggers in Transact-SQL. As a consultant, I get to see some interesting solutions involving triggers. In one case, I saw several thousand lines of T-SQL in a single trigger. My first thought was, “I bet there’s a different way to do that.” I was right. The people who’d written that trigger was unaware there was another way to solve the problem.

I see that a lot.

Software and database developers do their level best to deliver the assigned task using the tools available plus their experience with the tools. I don’t see malice in bad code; I see an opportunity to mature.

Granted, when I’m called in as a consultant I get paid by the hour. That’s not the case for many folks.

One ETL-Related Use For Triggers

Change detection is a sub-science of data engineering / data integration / ETL (Extract, Transform, and Load). One way to detect changes in a source is to track the last-updated datetime for the rows loaded by an ETL load process.

The next time the load process executes, your code grabs that value from the previous execution and executes a query that returns all rows inserted into or updated in the source since that time.

Simple, right? Not so fast.

Where to Manage Last-Updated

How the value of the last-updated datetime is managed is crucial. I’ve had arguments discussions with many data professionals about the best way to manage these values. My answer? Triggers.

“Why Triggers, Andy?”

I’m glad you asked. That is an excellent question!

Imagine the following scenario: Something unforeseen occurs with the data in the database. In our example, assume it’s something small – maybe impacting a single row of data. The most cost-effective manner to manage the update?

Manual update.

How is the last-updated value managed? It could be managed in a stored procedure. It could be managed in dynamic T-SQL hard-coded inside a class library for the application. If so – and given a data professional is now manually writing an UPDATE T-SQL statement to correct the issue, there is an opportunity to forget to update the last-updated column.

But if the last-updated column is managed by a trigger, it no longer matters if the data professional performing the update remembers or forgets, the trigger will fire and set the last-updated column to the desired value.

Change detection works and all is right with the world.

Troubleshooting Triggers

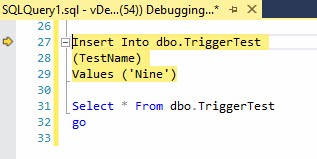

Troubleshooting triggers is… tough. There are some neat ways to troubleshoot built right into tools like SQL Server Management Studio (which is free). For example, in SSMS you can Debug T-SQL:

Conclusion

I like triggers for the things triggers do best, but triggers can definitely be misused. Choose wisely.

:{>

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE trigger [dbo].[trgSetLastUpdatedColumn] ON [dbo].[SomeTable]

AFTER UPDATE, INSERT

AS

SET NOCOUNT ON

IF NOT UPDATE([LastUpdated])

BEGIN

UPDATE tbl

SET tbl.[LastUpdated]=getDate()

FROM dbo.SomeTable tbl

INNER JOIN INSERTED I ON I.<=tbl.<

END

GO

ALTER TABLE [dbo].[SomeTable] ENABLE TRIGGER [trgSetLastUpdatedColumn]

GO

Better. Thanks Dallas!

:{>

The update function doesn’t tell you of the value in the column has changed, just that the column was included in the SET clause of the update. So setting the column to the existing value will cause this trigger to not update the last modified date. You need to choose for that case as well.

Totally agree Andy…however in my experience it has been and is increasinly very rare I am allowed to modify a source table to add a modified date and/or a trigger.

Given 1) fewer applications are custom and more are COTS, 2) more applications are off-prem and often SaaS….Then the opportunity to modify the source is becoming less and less likely. My newer sources are only accessible via REST or SOAP API’s – just getting accurate metadata for BIML can be challenging let alone modifying the source or even fully understanding how their change timestamps are maintained.

One final note: Never ever (times 10x) rely on the change timestamp on a source not getting set to a time prior to the actual change showing up in your query! Why you ask? Because I’m assuming you care about leaking data. Because of ACID principles in databases and its interaction with triggers…most databases do not support firing triggers on commits but rather only with DML. So what you ask? This means if the source system sets a logical transaction then does an update, sit on that update for 2 hours before the commit then….well lets just say you’ve leaked data all over the floor and your dog won’t be licking up the spill. Why? Its data time traveling. When you see the row in your query it will have time travelled from 2 hours in the past to the present because it’s timestamp will be 2 hours old due to the trigger recording the change when it happened, not when the commit allowed it to be seen (unless you’re doing dirty reads and I’m assuming you’re a clean person). This means you need to periodically (I do it in my daily runs) sweep back over older changes to pick up any missed late arriving data. It is my firm belief that many many organizations are leaking data for this very reason and not even knowing it.

Excellent points (as always), Gary!

Thank you for sharing!!

:{>