Visit the landing page for the Data Engineering Execution Orchestration Frameworks in Fabric Data Factory Series to learn more.

I must begin this post with a simple – perhaps profound – statement: Koen Verbeeck (LinkedIn | @Ko_Ver) is a talented data engineer. Here’s why I begin this post with such a statement.

If you’ve been following my series on Data Engineering Execution Orchestration Frameworks in Fabric Data Factory, you may recall I was somewhat stymied at the end of my post titled Fabric Data Factory Design Pattern – Execute a Collection of Child Pipelines from Metadata. Before I could move forward, there were a couple of problems I needed to solve:

- I wanted a more robust method for executing Fabric Data Factory pipelines dynamically. In my ADF execution orchestration framework – the framework I started building on 17 Jan 2025 as part of Data Engineering Fridays – I’m using the Azure REST API to start ADF pipeline execution. I really wanted to take a similar approach to a Fabric Data Factory execution orchestration framework.

- I struggled with security. In ADF, I use the System-Assigned Managed Identity (SAMI) of the Azure Data Factory to provide a secure, non-expiring “key” for Azure REST API management, and I wanted to use a SAMI in Fabric Data Factory for the same purpose. The only hitch in my grand plan? SAMIs are not yet available in Fabric Data Factory (at least, at the time of this writing).

Koen’s Great Idea

Koen didn’t let that stop him. He found – and blogged about – a way to start a Fabric Data Factory pipeline from Azure Data Factory!

In This Post…

We continue our journey begun in Configure Azure Security for the Fabric Data Factory REST API by configuring Azure Data Factory as an orchestrator for a Fabric Data Factory execution orchestration framework. The next steps are:

- Create a Fabric Data Factory test pipeline

- Execute the Fabric Data Factory test pipeline from an Azure Data Factory pipeline

Creating a Fabric Data Factory Test Pipeline

Let’s begin where we left off in the post titled Configure Azure Security for the Fabric Data Factory REST API.

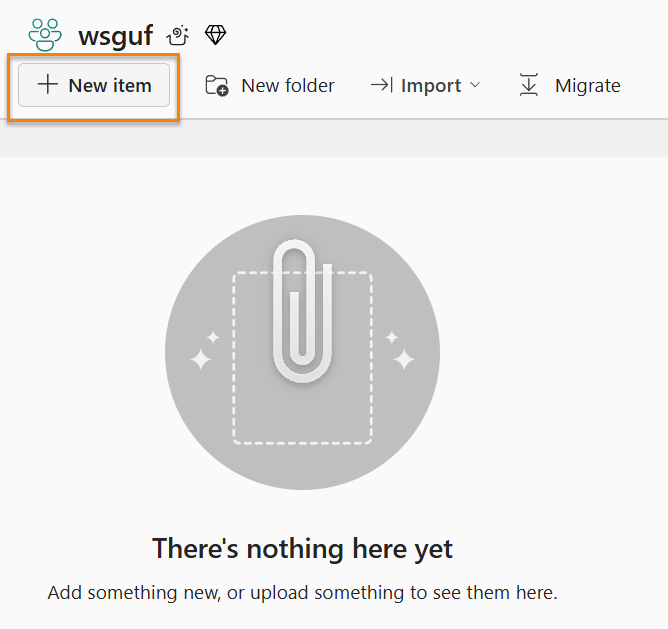

Visit the Fabric Data Factory workspace you configured to work with Azure Data Factory. Click the “+ New item” button to begin:

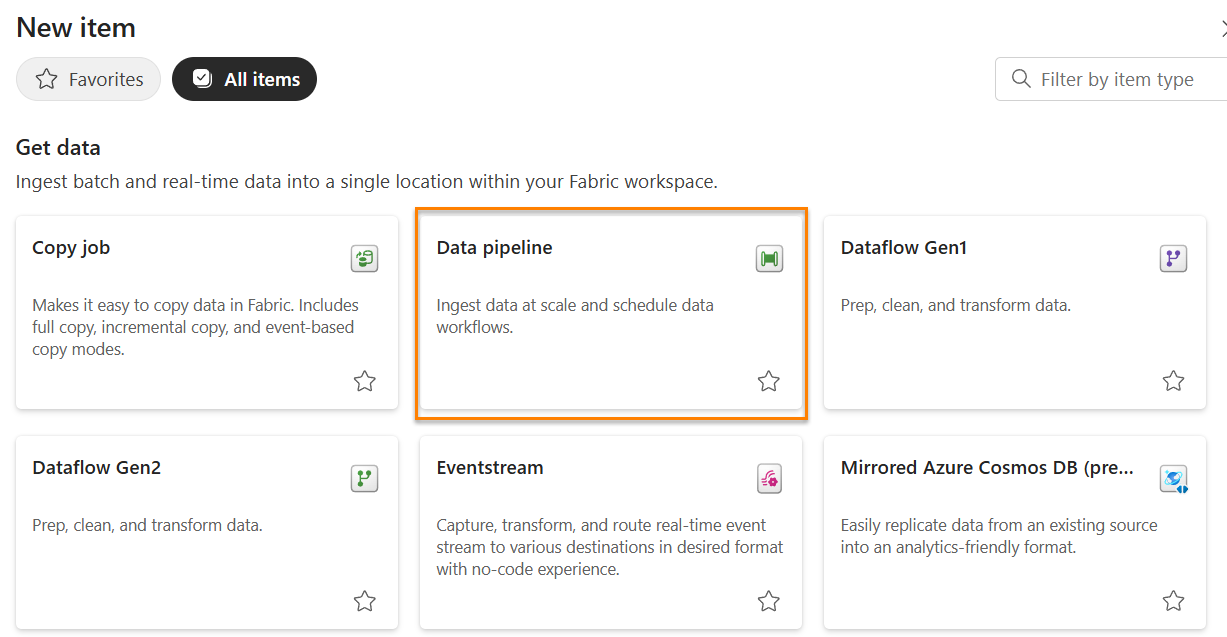

When the “New item” blade displays, click the “Data pipeline” tile:

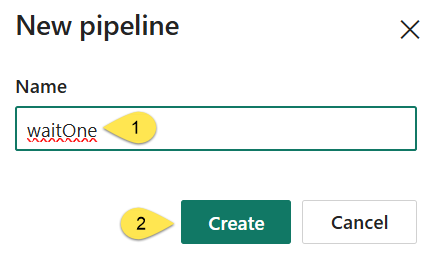

The “New pipeline” dialog displays, where you may:

- Name your pipeline “waitOne” (or “George”)

- Click the “Create” button to proceed:

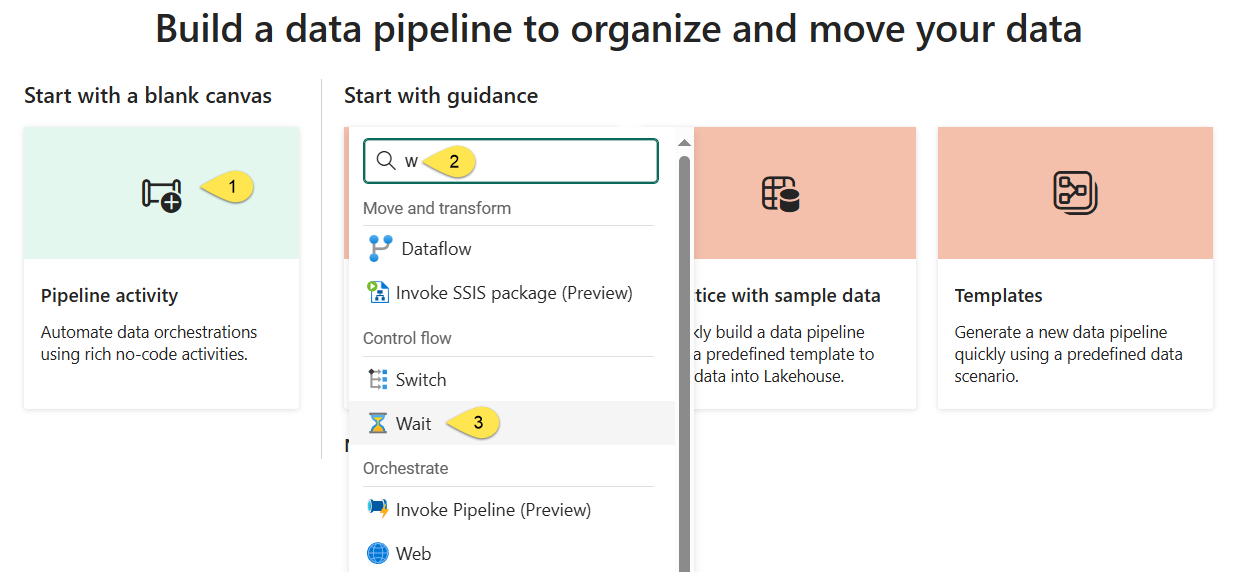

When “Build a data pipeline to organize and move your data” displays:

- Click “Start with a blank canvas”

- Scroll or search for a Wait activity

- Select a Wait activity:

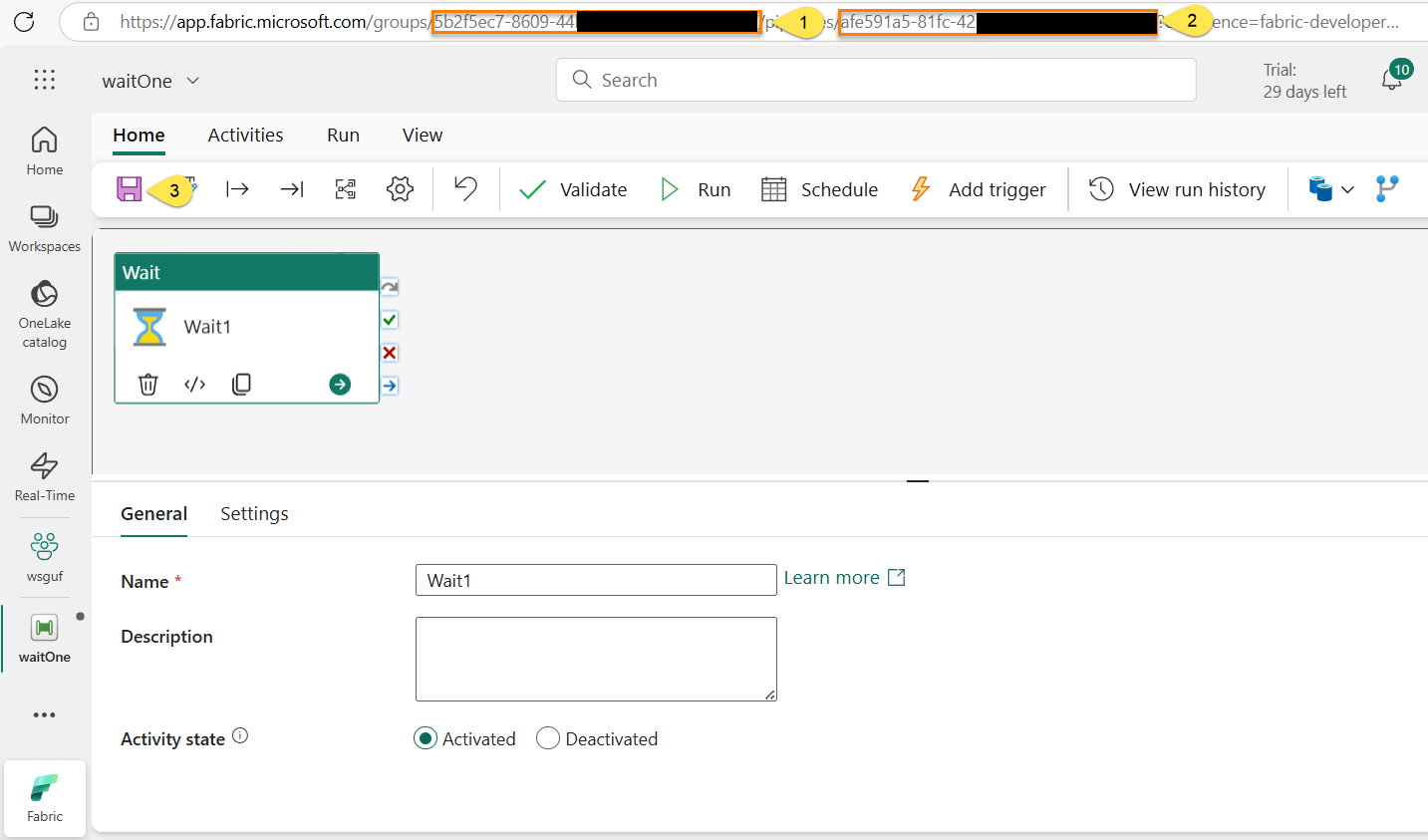

The “waitOne” pipeline is created. Click Save to save the pipeline. As Koen points out, we need:

- The workspace Id

- The pipeline Id

Fortunately, both the workspace (1) and pipeline (2) Ids are part of the URL for the “waitOne” pipeline. Save them for later:

The Fabric Data Factory test pipeline is ready and we have the metadata we will need to start it from an Azure Data Factory pipeline!
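If you would rather not copy the two Ids out of the address bar by hand, a small helper can pull them out of the pipeline URL. This is only a sketch: it assumes the URL path looks like `/groups/<workspace Id>/pipelines/<pipeline Id>`, which is the layout I would expect in the Fabric portal but which you should check against your own browser. The function name and the sample GUIDs are made up for illustration.

```python
from __future__ import annotations

from urllib.parse import urlparse


def extract_fabric_ids(pipeline_url: str) -> tuple[str, str]:
    """Return (workspace_id, pipeline_id) from a Fabric pipeline URL.

    Assumes the path contains .../groups/<workspace>/pipelines/<pipeline>.
    """
    # Split the URL path into its non-empty segments.
    segments = [s for s in urlparse(pipeline_url).path.split("/") if s]
    try:
        workspace_id = segments[segments.index("groups") + 1]
        pipeline_id = segments[segments.index("pipelines") + 1]
    except (ValueError, IndexError) as exc:
        raise ValueError(
            f"Unexpected Fabric pipeline URL layout: {pipeline_url}"
        ) from exc
    return workspace_id, pipeline_id


if __name__ == "__main__":
    # Hypothetical URL with placeholder GUIDs, not a real workspace.
    sample = (
        "https://app.fabric.microsoft.com/groups/"
        "11111111-1111-1111-1111-111111111111/pipelines/"
        "22222222-2222-2222-2222-222222222222?experience=data-factory"
    )
    workspace_id, pipeline_id = extract_fabric_ids(sample)
    print("workspace Id:", workspace_id)
    print("pipeline Id: ", pipeline_id)
```

If the helper raises a `ValueError`, the URL layout differs from the assumption above and the Ids are best copied manually.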

Execute the Fabric Data Factory Test Pipeline from an Azure Data Factory Pipeline

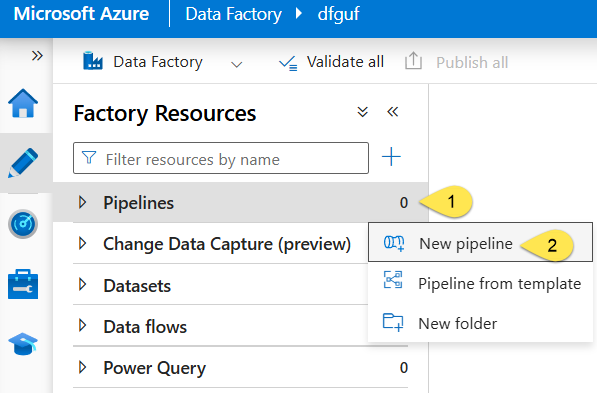

Navigate to Azure Data Factory (ADF) Studio. Click the Author page:

- Click the ellipsis to the right of “Pipelines” (not pictured)

- And then click “New pipeline”:

When the new pipeline displays, name your pipeline “execute-fabric-waitOne” (or “George”):

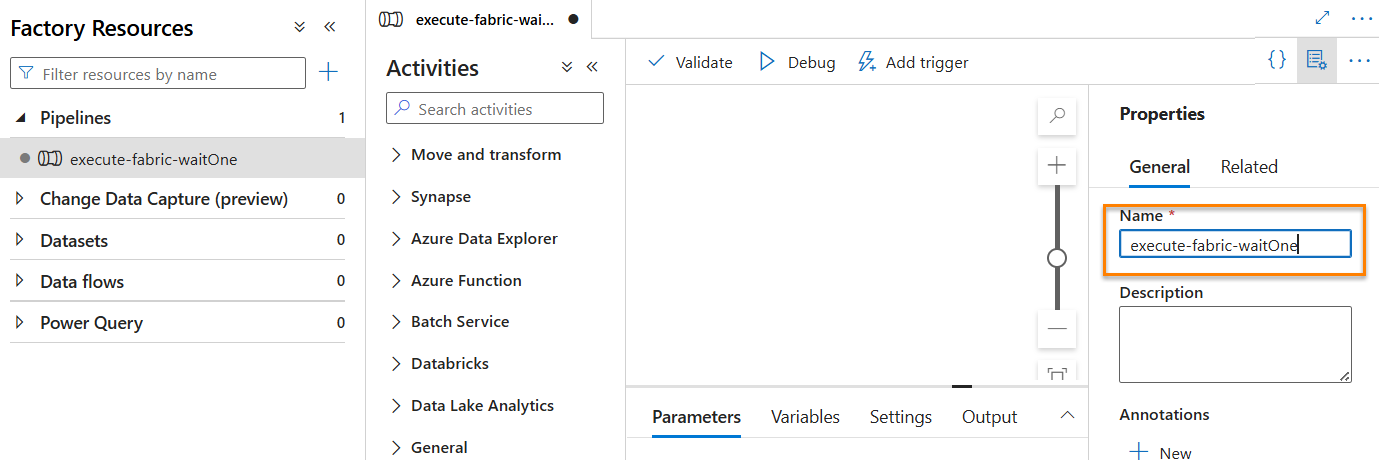

Next:

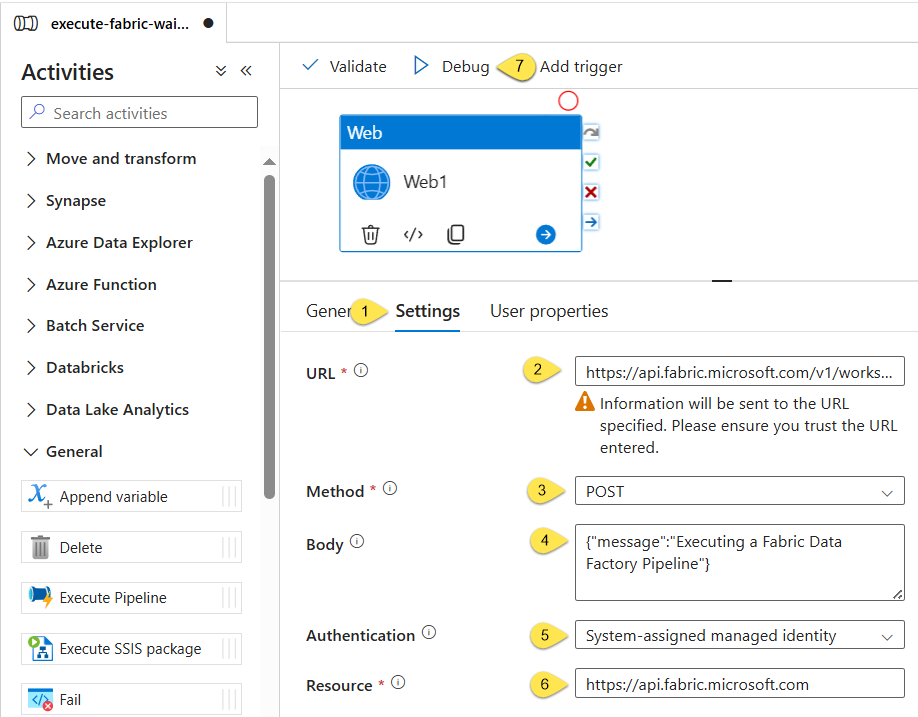

- Expand the “General” category in the “Activities” pane

- Drag a Wait activity onto the canvas:

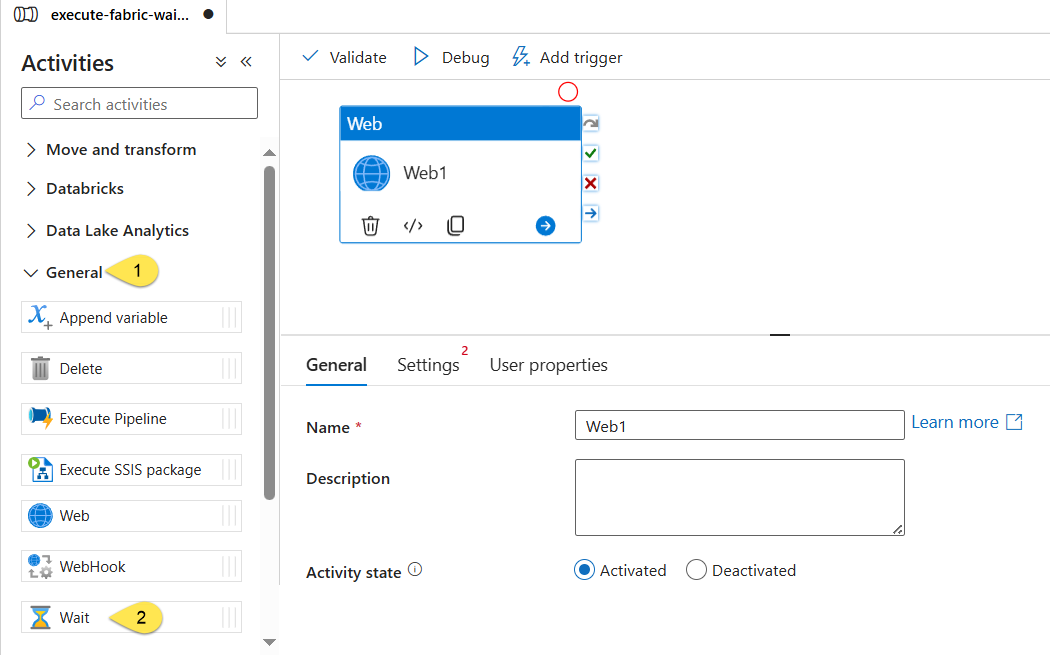

Click the Wait Activity and then:

- Click the Settings tab.

- In the URL property, enter the following URL:

https://api.fabric.microsoft.com/v1/workspaces/5b2f5ec7-8609-44nn-nnnn-nnnnnnnnnnnn/items/afe591a5-81fc-42nn-nnnn-nnnnnnnnnnnn/jobs/instances?jobType=Pipeline

where “5b2f5ec7-8609-44nn-nnnn-nnnnnnnnnnnn” is the Fabric Workspace Id and “afe591a5-81fc-42nn-nnnn-nnnnnnnnnnnn” is the Fabric Data Factory pipeline Id (from the earlier Fabric Data Factory pipeline step).

- Set Method to “POST”

- Set the Body property to some generic JSON (it is not allowed to be empty). Be sure to format the JSON correctly, for example:

{"key":"value"}

- Set Authentication to “System-assigned managed identity”

- Set Resource to “https://api.fabric.microsoft.com”

- Click the “Debug” button to test:

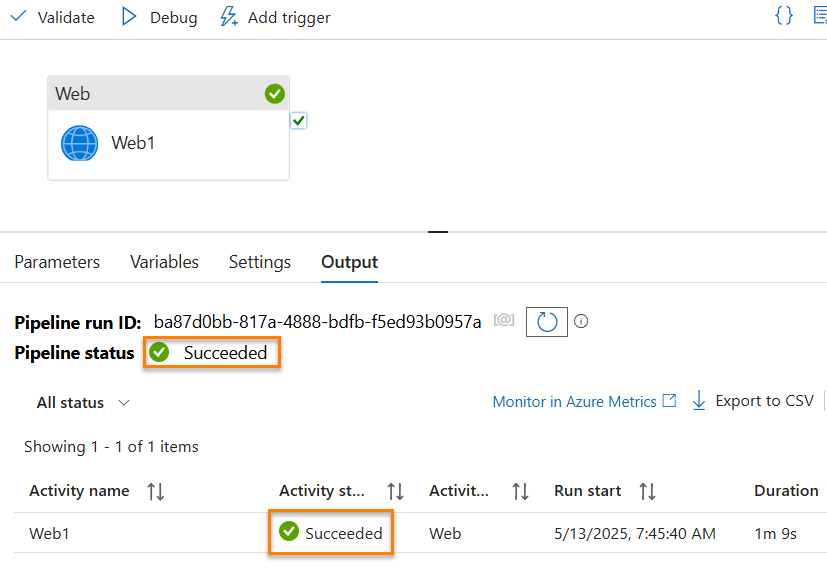
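For a metadata-driven framework, it can help to generate these settings rather than type them. The sketch below builds the REST URL from the two Ids and wraps it in a dictionary shaped like an ADF Web activity definition. The URL pattern, method, body, and resource come straight from the steps above; the JSON property names (`WebActivity`, `typeProperties`, `authentication` with type `MSI`) reflect my understanding of the ADF pipeline JSON and should be compared with the code view of your own pipeline. The function names and placeholder Ids are hypothetical.

```python
import json

# Resource / base address used in the Web activity settings above.
FABRIC_API = "https://api.fabric.microsoft.com"


def build_run_url(workspace_id: str, pipeline_id: str) -> str:
    """Build the URL that starts an on-demand run of a Fabric pipeline."""
    return (
        f"{FABRIC_API}/v1/workspaces/{workspace_id}"
        f"/items/{pipeline_id}/jobs/instances?jobType=Pipeline"
    )


def build_web_activity(name: str, workspace_id: str, pipeline_id: str) -> dict:
    """Return a dict resembling an ADF Web activity definition.

    Property names are an assumption; verify them in ADF Studio's code view.
    """
    return {
        "name": name,
        "type": "WebActivity",
        "typeProperties": {
            "url": build_run_url(workspace_id, pipeline_id),
            "method": "POST",
            # The body may not be empty, so send some generic JSON.
            "body": {"key": "value"},
            # System-assigned managed identity, scoped to the Fabric API.
            "authentication": {"type": "MSI", "resource": FABRIC_API},
        },
    }


if __name__ == "__main__":
    activity = build_web_activity(
        "start-fabric-waitOne",
        "<workspace Id>",  # placeholder, replace with your workspace Id
        "<pipeline Id>",   # placeholder, replace with your pipeline Id
    )
    print(json.dumps(activity, indent=2))
```

Keeping the URL construction in one function means the workspace and pipeline Ids can later come from a metadata table instead of being hard-coded in each pipeline.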

If all goes as planned, the Azure Data Factory pipeline debug execution succeeds:

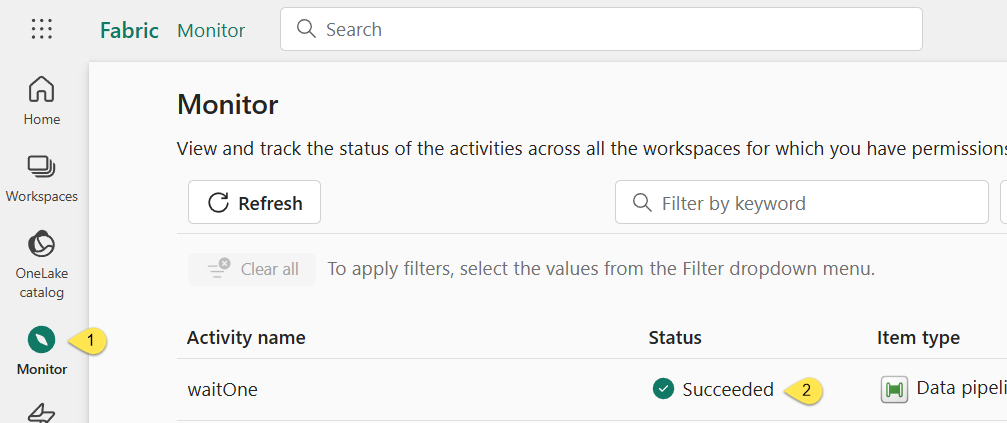

Navigate to Fabric Data Factory:

- Click the “Monitor” page

- View the execution status of the Fabric Data Factory test pipeline:

“Succeeded” is the goal!
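The Monitor page is the simplest way to check a run, but an orchestrator will eventually want to check status programmatically. The sketch below shows only the polling logic, with the actual status lookup passed in as a function so no credentials are needed to try it. The status names are my recollection of what the Fabric job instance endpoint reports (which, as I understand it, differ from the “Succeeded” label shown in Monitor) and should be verified against the Fabric REST API documentation; `wait_for_job` and its parameters are hypothetical names.

```python
import time
from typing import Callable

# Assumed terminal job statuses; verify against the Fabric REST API docs.
TERMINAL_STATUSES = {"Completed", "Failed", "Cancelled", "Deduped"}


def wait_for_job(
    get_status: Callable[[], str],
    poll_seconds: float = 10.0,
    max_polls: int = 60,
) -> str:
    """Poll get_status() until it returns a terminal status.

    get_status is any zero-argument callable that returns the current
    status string, for example a function that issues an authenticated
    GET against the job instance URL.
    """
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        time.sleep(poll_seconds)
    raise TimeoutError(f"Job did not finish after {max_polls} polls")


if __name__ == "__main__":
    # Simulated status sequence standing in for real API responses.
    fake_statuses = iter(["NotStarted", "InProgress", "Completed"])
    final = wait_for_job(lambda: next(fake_statuses), poll_seconds=0)
    print("Final status:", final)
```

Injecting the lookup keeps the loop independent of how you authenticate, which matters here because the managed identity token is only available inside Azure.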

Conclusion

Using the patterns in this post and the previous post, titled Configure Azure Security for the Fabric Data Factory REST API, it is now feasible to build a metadata-driven execution orchestration framework in Fabric Data Factory.

Thanks (again), Koen Verbeeck (LinkedIn | @Ko_Ver), for sharing this missing link!

Need Help?

Consulting & Services

Enterprise Data & Analytics delivers data engineering consulting, Data Architecture Strategy Reviews, and Data Engineering Code Reviews! Let our experienced teams lead your enterprise data integration implementation. EDNA data engineers possess experience in Azure Data Factory, Fabric Data Factory, Snowflake, and SSIS. Contact us today!

Training

We deliver Fabric Data Factory training live and online. Subscribe to Premium Level to access all my recorded training – which includes recordings of previous Fabric Data Factory trainings – for a full year.

:{>
