Blog Post Note / Disclaimer / Apology: This is a long blog post. It makes up for the length by being complex. I considered ways to shorten it and to reduce its complexity. This is the best I could do. – Andy

Visit the landing page for the Data Engineering Execution Orchestration Frameworks in Fabric Data Factory Series to learn more.

At the end of my post titled Fabric Data Factory Design Pattern – Execute a Collection of Child Pipelines from Metadata, I hit a wall: before I could move forward, I needed to figure out how to execute Fabric Data Factory pipelines dynamically.

I found the answer with a little help from my friends! Read Configure Azure Security for the Fabric Data Factory REST API and Fabric Data Factory Pipeline Execution From Azure Data Factory – the posts before this post in the Data Engineering Execution Orchestration Frameworks in Fabric Data Factory Series – to learn more.

I begin this post by making the executive decision to combine the work in Fabric Data Factory Design Pattern – Execute a Collection of Child Pipelines from Metadata with the discoveries outlined in Configure Azure Security for the Fabric Data Factory REST API and Fabric Data Factory Pipeline Execution From Azure Data Factory. The first step is to configure the wsPipelineTest Fabric workspace (from Fabric Data Factory Design Pattern – Execute a Collection of Child Pipelines from Metadata) so the dfguf Azure Data Factory can interact with it, using the security described in Configure Azure Security for the Fabric Data Factory REST API.

In this post, we:

- Configure wsPipelineTest Fabric Workspace Security

- Get Fabric Data Factory Pipeline Metadata

- Execute a Fabric Data Factory Pipeline From ADF

- Build a Basic Parent ADF Pipeline, which covers:

  - How to Execute the Child Pipelines

  - Edit the “Execute Fabric Pipeline” Web Activity

  - Let’s Test!

Configure wsPipelineTest Fabric Workspace Security

If you don’t have a Fabric workspace named “wsPipelineTest,” you can learn more about how I built mine by visiting past posts in this series on the Data Engineering Execution Orchestration Frameworks in Fabric Data Factory Series landing page.

Follow the post Configure Azure Security for the Fabric Data Factory REST API, starting in the section titled “In Fabric, Setup a Workspace + Security,” but skip the creation of a new Fabric workspace. Instead, navigate to the wsPipelineTest workspace used in the Fabric Data Factory Design Pattern – Execute a Collection of Child Pipelines from Metadata (and previous) posts.

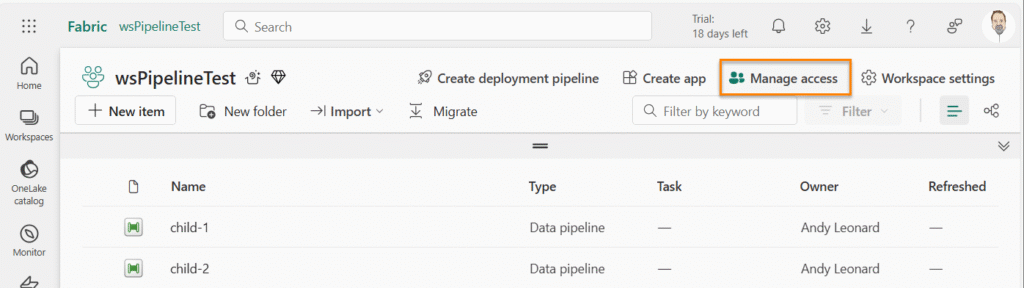

On the wsPipelineTest workspace page, click “Manage access”:

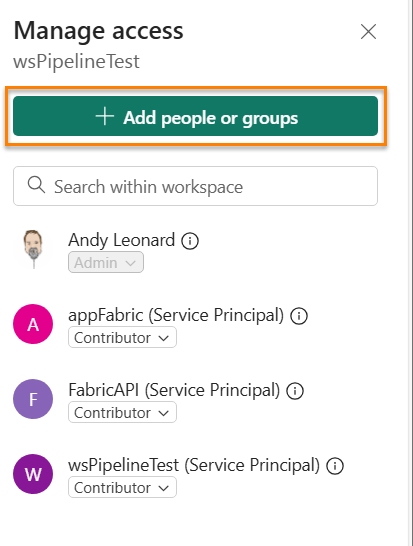

When the “Manage access” blade displays, click the “+ Add people or groups” button:

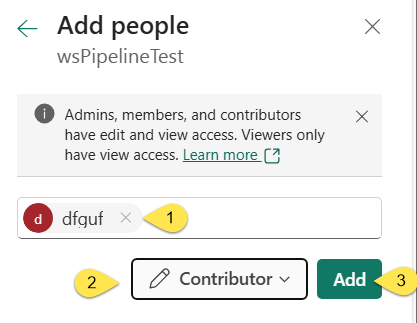

When the “Add people” blade displays,

- Enter the name of the Azure Data Factory (dfguf in this series)

- Change the role to “Contributor”

- Click the “Add” button:

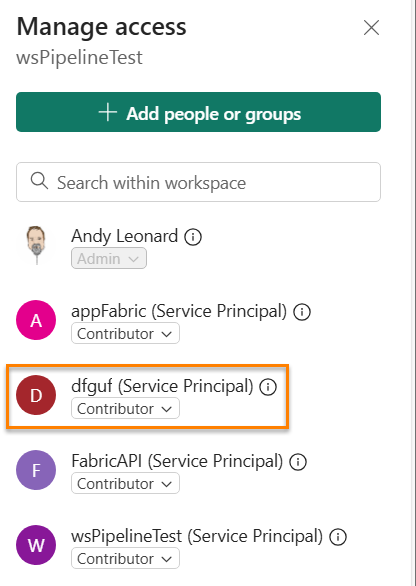

The Azure Data Factory system-assigned managed identity (SAMI) now has Contributor access to the Fabric workspace:

Get Fabric Data Factory Pipeline Metadata

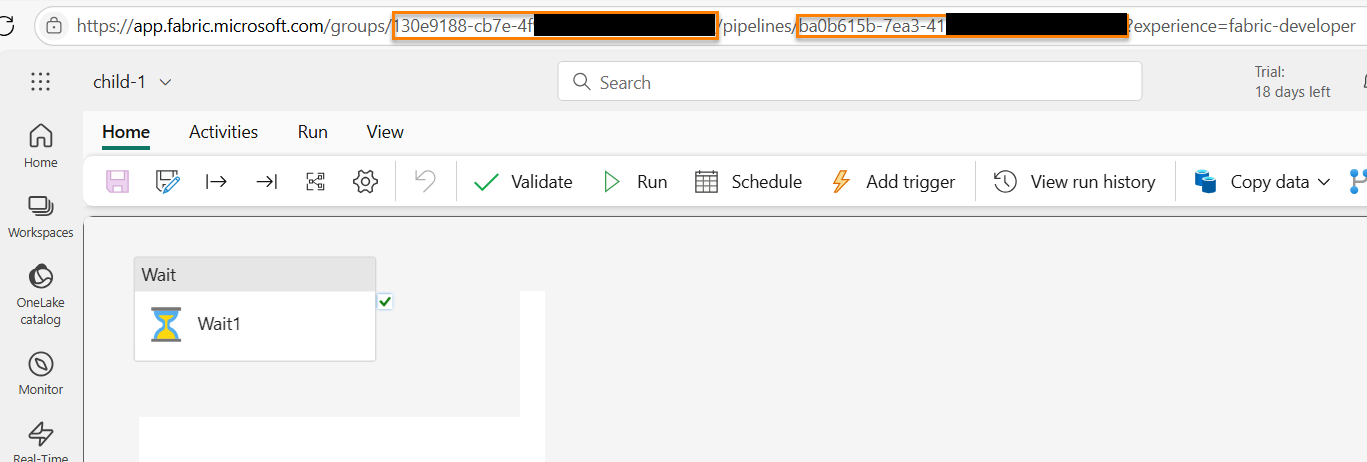

Click the pipeline named “child-1” to open it.

We need:

- The workspace Id

- The pipeline Id

Fortunately, both the workspace (1) and pipeline (2) Ids are part of the URL for the “child-1” pipeline. Save them for later:
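If you would rather not copy the Ids out of the address bar by hand, a small helper can pull them from the URL. This is a minimal sketch, assuming the pipeline URL path looks like `/groups/<workspaceId>/pipelines/<pipelineId>`; that pattern and the helper name `parse_fabric_pipeline_url` are my assumptions, so compare them against the URL in your own browser before relying on this.

```python
import re
from urllib.parse import urlparse

# A GUID is 8-4-4-4-12 hexadecimal characters.
GUID = r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"

# ASSUMPTION: the Fabric pipeline URL path follows /groups/<workspaceId>/pipelines/<pipelineId>
PATH_PATTERN = re.compile(
    rf"/groups/(?P<workspace_id>{GUID})/pipelines/(?P<pipeline_id>{GUID})"
)


def parse_fabric_pipeline_url(url: str) -> tuple:
    """Return (workspace_id, pipeline_id) from a Fabric pipeline URL (hypothetical helper)."""
    path = urlparse(url).path  # ignore the query string, e.g. ?experience=...
    match = PATH_PATTERN.search(path)
    if match is None:
        raise ValueError(f"Unrecognized Fabric pipeline URL: {url}")
    return match.group("workspace_id"), match.group("pipeline_id")


if __name__ == "__main__":
    # Made-up Ids for illustration only.
    example = (
        "https://app.fabric.microsoft.com/groups/11111111-2222-3333-4444-555555555555"
        "/pipelines/66666666-7777-8888-9999-000000000000?experience=data-factory"
    )
    print(parse_fabric_pipeline_url(example))
```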

Execute a Fabric Data Factory Pipeline From ADF

If you do not have an Azure Data Factory named “dfguf,” you can learn more about building one in the post titled “Fabric Data Factory Pipeline Execution From Azure Data Factory”.

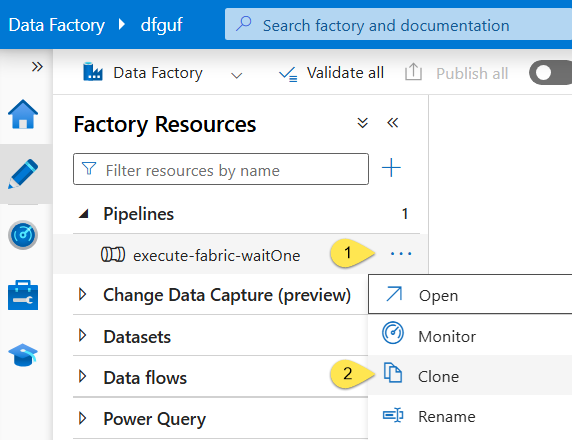

In the dfguf Azure Data Factory,

- Click the ellipsis beside the ADF pipeline named “execute-fabric-waitOne”

- Click “Clone”:

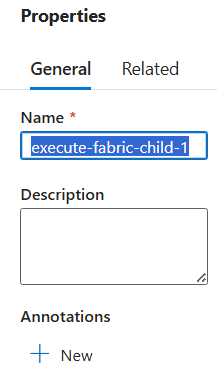

Rename the cloned pipeline “execute-fabric-child-1”:

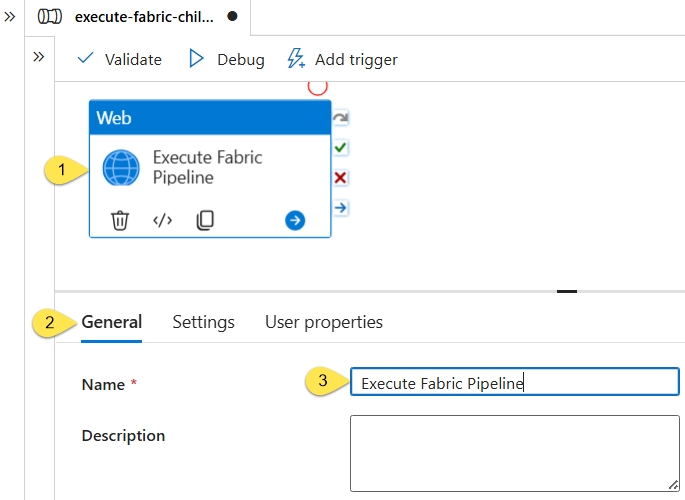

Edit the Web1 activity:

- Click the Web1 activity

- Click the General tab

- Change the Name of Web1 to “Execute Fabric Pipeline”:

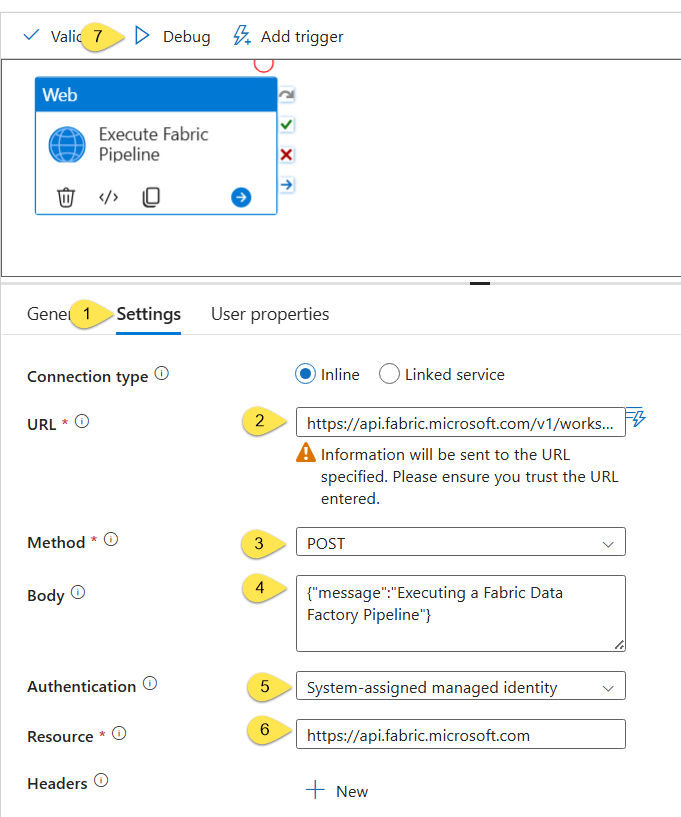

Next:

- Click the Settings tab.

- Edit the URL property to read:

https://api.fabric.microsoft.com/v1/workspaces/130e9188-cb7e-4fnn-nnnn-nnnnnnnnnnnn/items/ba0b615b-7ea3-41nn-nnnn-nnnnnnnnnnnn/jobs/instances?jobType=Pipeline

where “130e9188-cb7e-4fnn-nnnn-nnnnnnnnnnnn” is the Fabric workspace Id and “ba0b615b-7ea3-41nn-nnnn-nnnnnnnnnnnn” is the Fabric Data Factory pipeline Id (both saved earlier from the “child-1” pipeline’s URL).

- Make sure the Method is set to “POST”

- Make sure the Body property is set to some generic JSON (it is not allowed to be empty). Be sure to format the JSON correctly, using straight double quotes, as {"key":"value"}

- Make sure Authentication is set to “System-assigned managed identity”

- Make sure Resource is set to “https://api.fabric.microsoft.com”

- Click the “Debug” button to test:

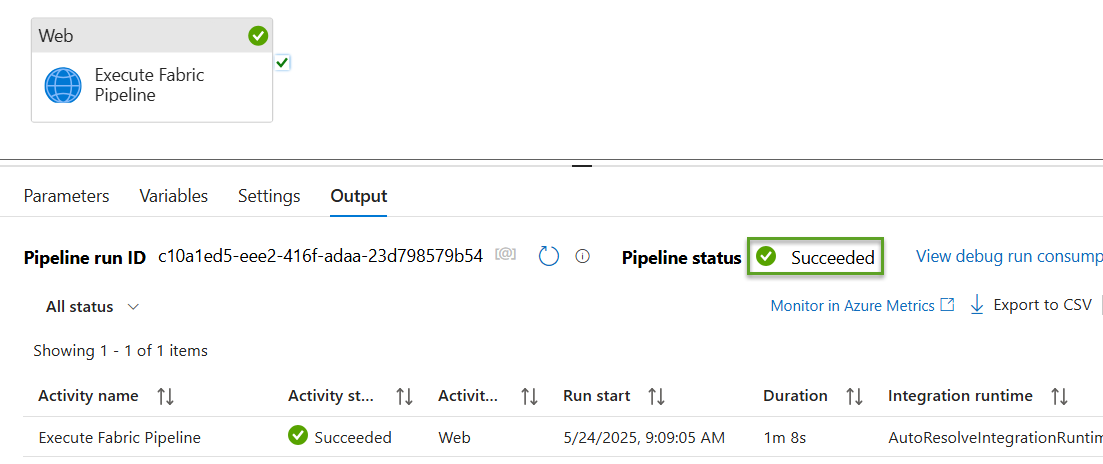

If all goes as planned, the Azure Data Factory pipeline debug execution succeeds:

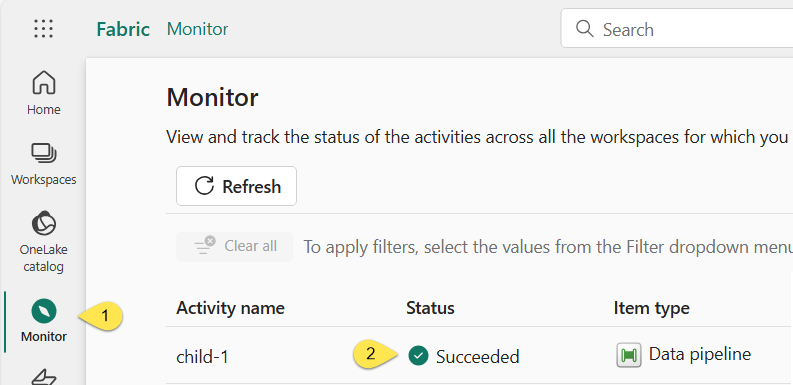
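The Web activity settings above amount to a single authenticated HTTP POST. For readers who want to see that request outside ADF, here is a rough Python sketch that builds the same call with the standard library. It assumes you already have an access token for the https://api.fabric.microsoft.com resource (ADF obtains one from its system-assigned managed identity; outside ADF, one option is the azure-identity package’s `DefaultAzureCredential().get_token("https://api.fabric.microsoft.com/.default")`). The function name `build_run_pipeline_request` is hypothetical.

```python
import json
import urllib.request
from typing import Optional

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_run_pipeline_request(workspace_id: str,
                               pipeline_id: str,
                               access_token: str,
                               body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) the POST that starts a Fabric Data Factory pipeline.

    Hypothetical helper mirroring the "Execute Fabric Pipeline" Web activity settings.
    """
    url = (f"{FABRIC_API}/workspaces/{workspace_id}"
           f"/items/{pipeline_id}/jobs/instances?jobType=Pipeline")
    # The Web activity Body can't be empty, so default to a small, valid
    # JSON object (straight double quotes, not curly quotes).
    payload = json.dumps(body if body is not None else {"key": "value"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            # ASSUMPTION: the token was issued for https://api.fabric.microsoft.com
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder Ids and token for illustration only; nothing is sent.
    req = build_run_pipeline_request("<workspaceId>", "<pipelineId>", "<token>")
    print(req.get_method(), req.full_url)
```

To actually send it, pass the request to `urllib.request.urlopen(req)`; the sketch stops short of sending so the request can be inspected without credentials.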

Navigate to Fabric Data Factory:

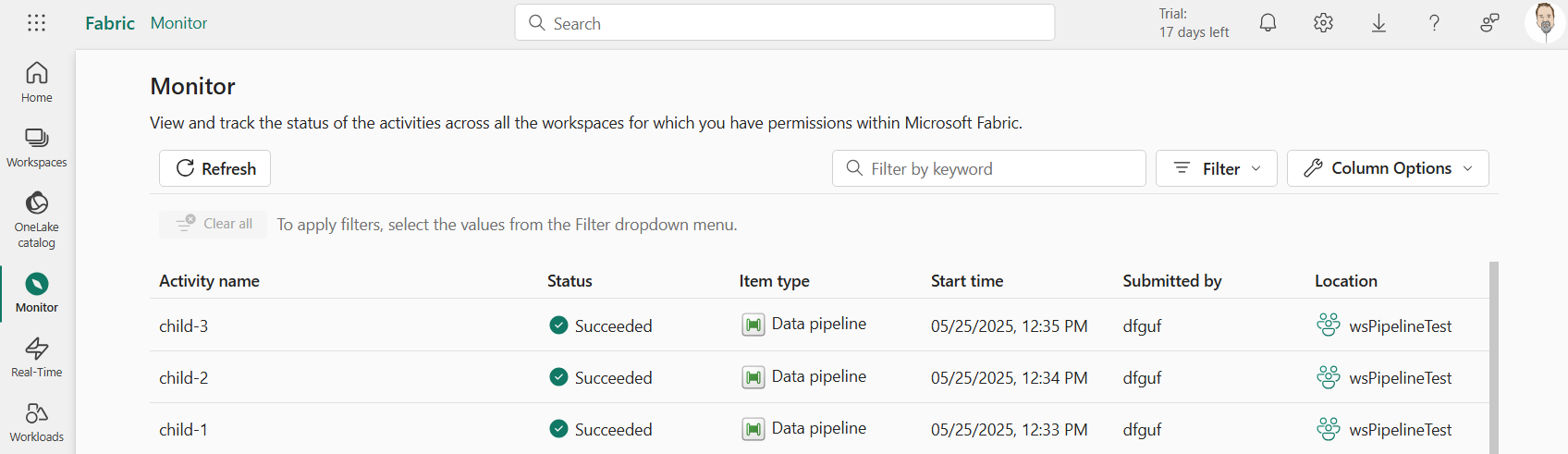

- Click the “Monitor” page

- View the execution status of the Fabric Data Factory test pipeline:

The next step is to interact with metadata in a basic parent ADF pipeline.

Build a Basic Parent ADF Pipeline

The goal for the remainder of this blog post is to demonstrate one way to build a metadata-driven execution orchestration “engine.” Believe it or not, this is a relatively simple solution.
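Conceptually, the “engine” is small: read rows of metadata, then start one child pipeline per row, in order. The Python sketch below shows that shape outside ADF. The rows are hypothetical dictionaries keyed by workspaceId and pipelineId (the column names the parent pipeline uses later in this post), and `start_child` is a placeholder for whatever actually starts a pipeline; in ADF, that job belongs to the “Execute Fabric Pipeline” Web activity.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, str]


def orchestrate(rows: Iterable[Row], start_child: Callable[[Row], None]) -> List[str]:
    """Metadata-driven orchestration: one start call per metadata row, in order.

    Adding a child pipeline means adding a row of metadata,
    not editing this function (or the parent pipeline).
    """
    started: List[str] = []
    for row in rows:  # like a Foreach activity with "Sequential" checked
        start_child(row)
        started.append(row["pipelineId"])
    return started


if __name__ == "__main__":
    # Hypothetical metadata rows; real values come from the metadata database.
    metadata = [
        {"workspaceId": "ws-1", "pipelineId": "child-1-id"},
        {"workspaceId": "ws-1", "pipelineId": "child-2-id"},
    ]
    print(orchestrate(metadata, lambda row: print("starting", row["pipelineId"])))
```

The design choice worth noticing is that the list of children is data, not code, which is what makes the parent pipeline reusable.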

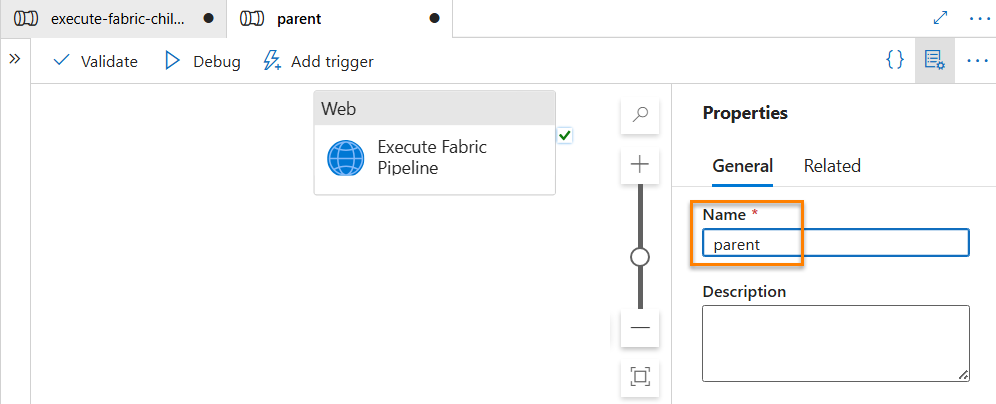

Begin by cloning the “execute-fabric-child-1” ADF pipeline.

Name the new pipeline “parent”:

The next step is to obtain the metadata.

Remember, we need two pieces of information to locate a Fabric Data Factory pipeline: workspaceId and pipelineId.

Fortunately for us, we built a metadata database and even stored some workspaceId and pipelineId values in the post titled “Fabric Data Factory Design Pattern – Execute a Collection of Child Pipelines from Metadata”!

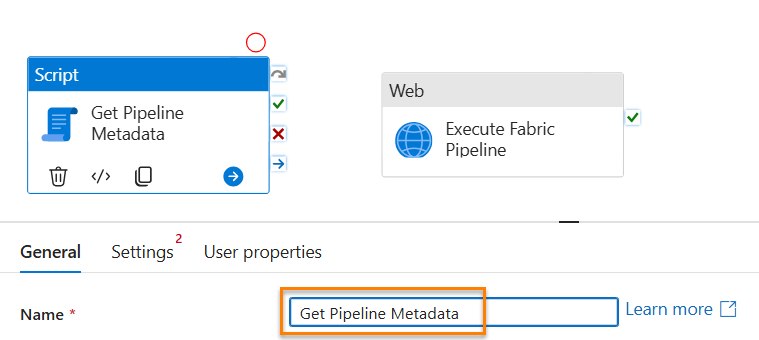

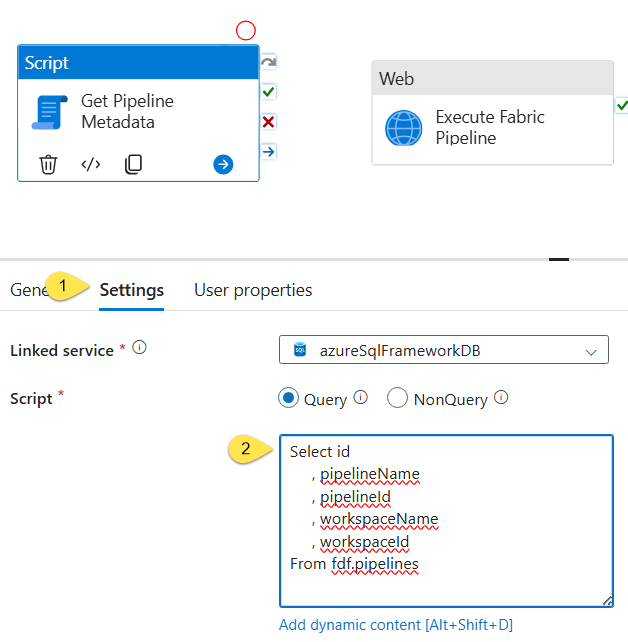

Add a Script activity to the parent pipeline surface and name the Script activity “Get Pipeline Metadata”:

We next need a connection to the metadata database.

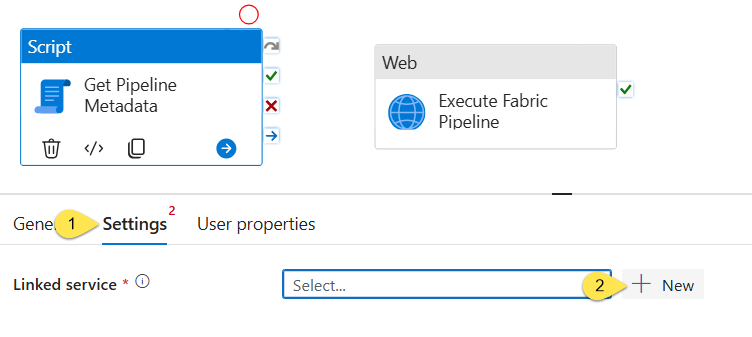

To continue the Script activity configuration:

- Click the Settings tab

- Click the New button to add a new Linked Service:

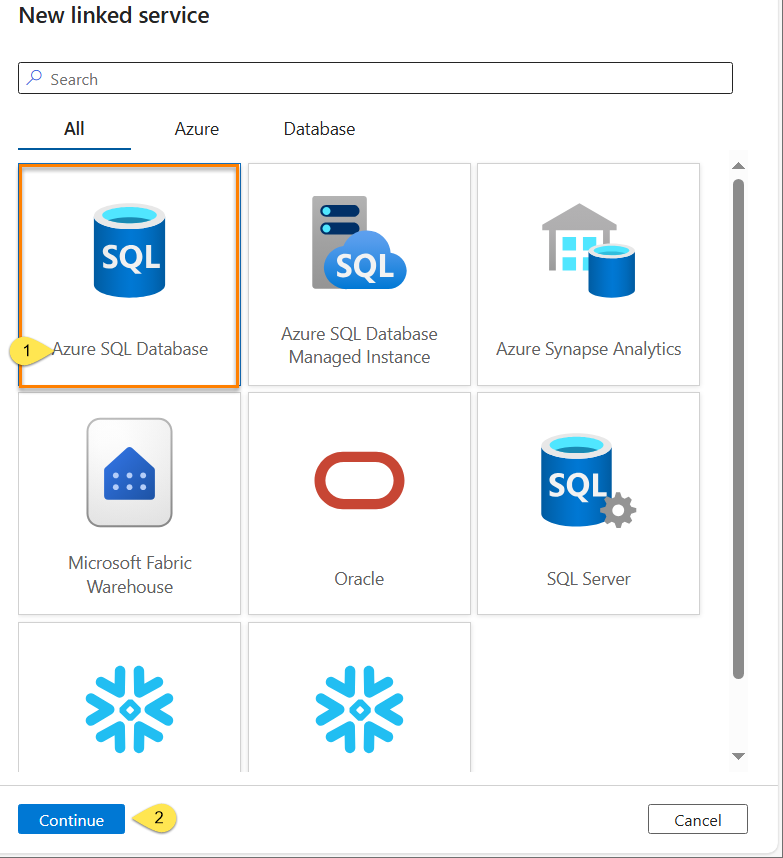

When the “New linked service” blade displays,

- Click “Azure SQL Database”

- Click the “Continue” button:

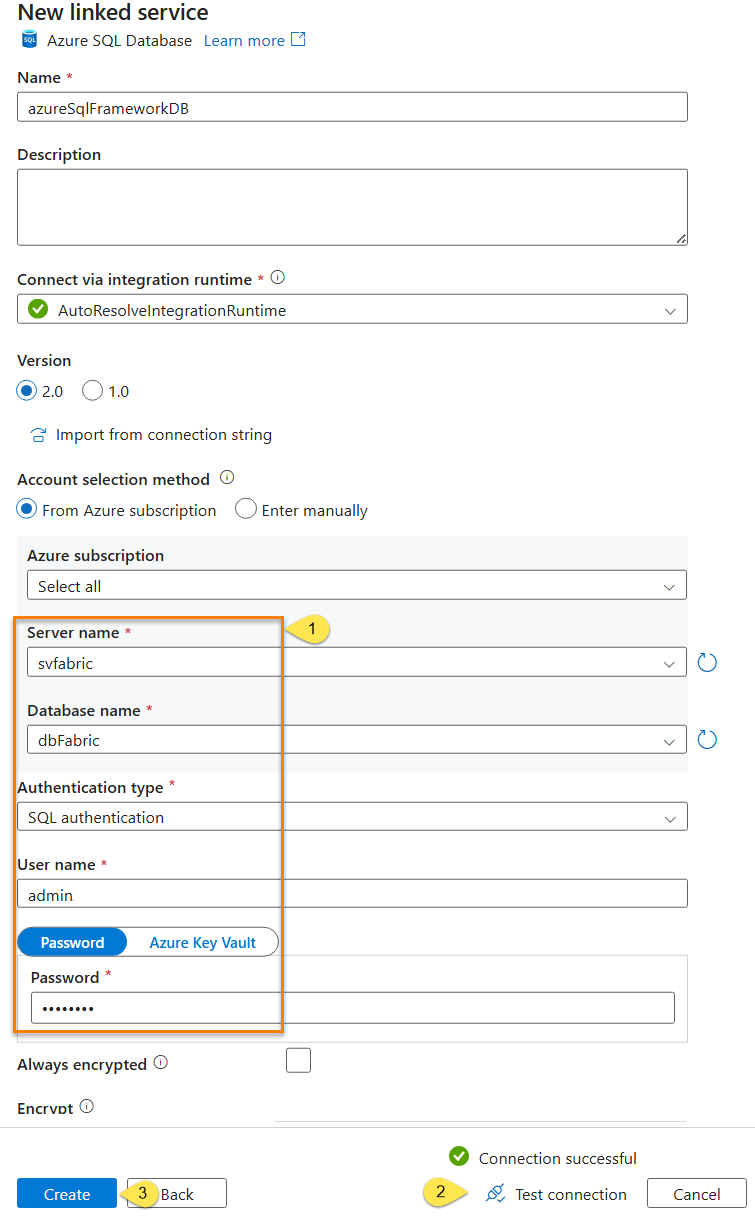

- Configure a connection to the metadata database created in the post titled “Fabric Data Factory Design Pattern – Execute a Collection of Child Pipelines from Metadata,” in the section titled “Create and Populate a Database Table to Contain Fabric Data Factory Pipeline Metadata”

- Click “Test connection” to ensure connectivity

- Click the “Create” button to create the new linked service:

Once the linked service is configured, we can continue configuring the Script activity to retrieve metadata.

- Click the Settings tab

- Enter the following T-SQL in the Query property:

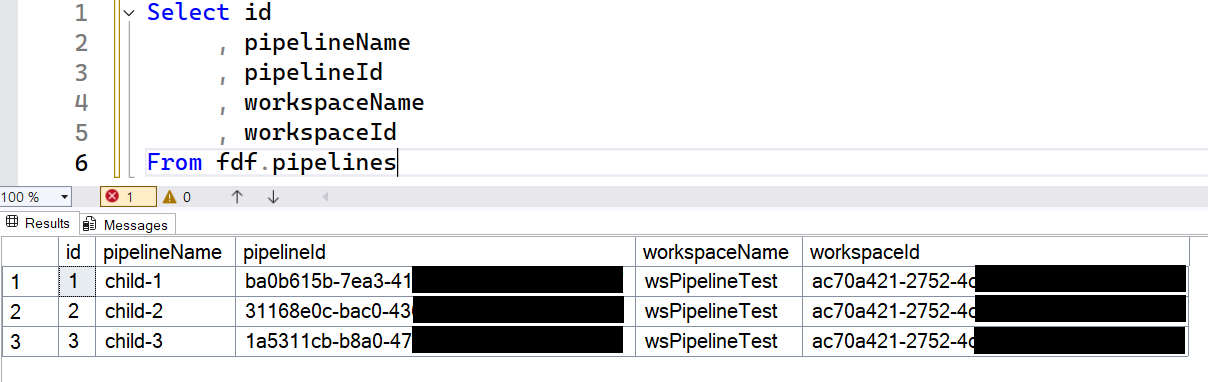

A test of the query in SQL Server Management Studio shows the query returns the following results:

These are the pipelines we will execute in this (admittedly early) version of the parent pipeline. I can hear some of you thinking, “How do we execute these pipelines in the parent pipeline, Andy?” That’s an excellent question.

How to Execute the Child Pipelines

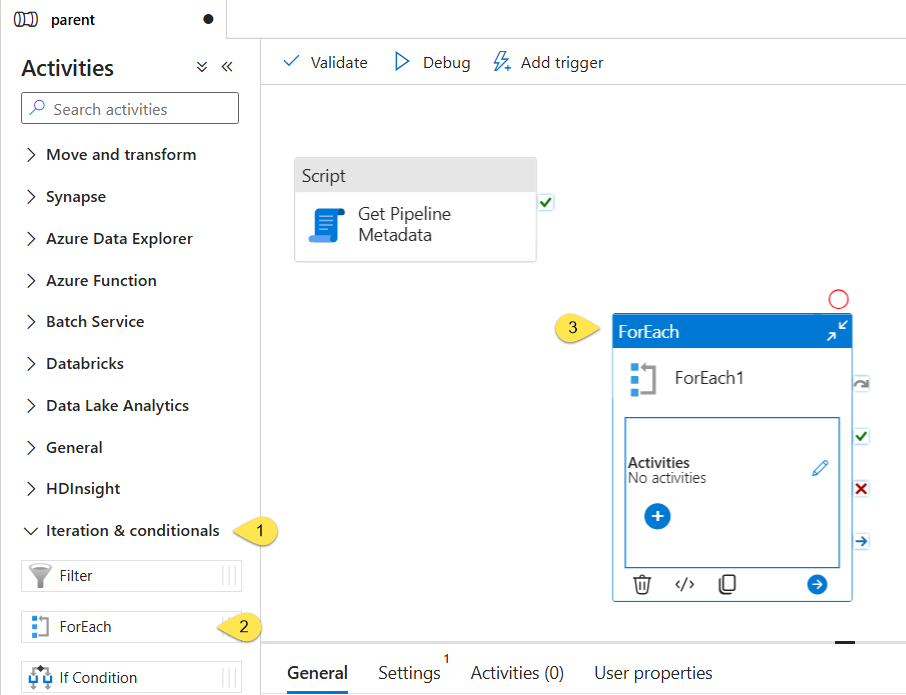

To begin configuring the execution orchestration engine we’re building in the parent pipeline:

- Expand the “Iteration & conditionals” category in Activities

- Drag a Foreach activity…

- …onto the pipeline surface:

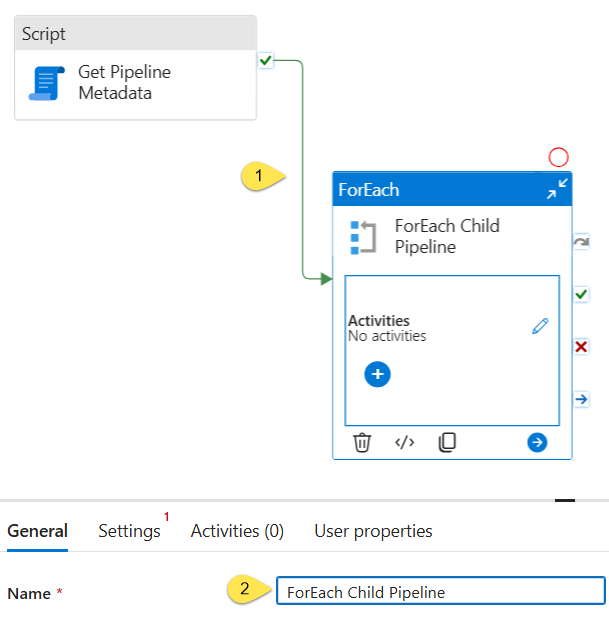

Next,

- Connect an OnSuccess output from the “Get Pipeline Metadata” script activity to the Foreach activity:

- Click on the Foreach activity and change the Name to “Foreach Child Pipeline”:

Let’s have the Foreach activity loop over the rows (the child pipeline metadata) returned by the Script activity.

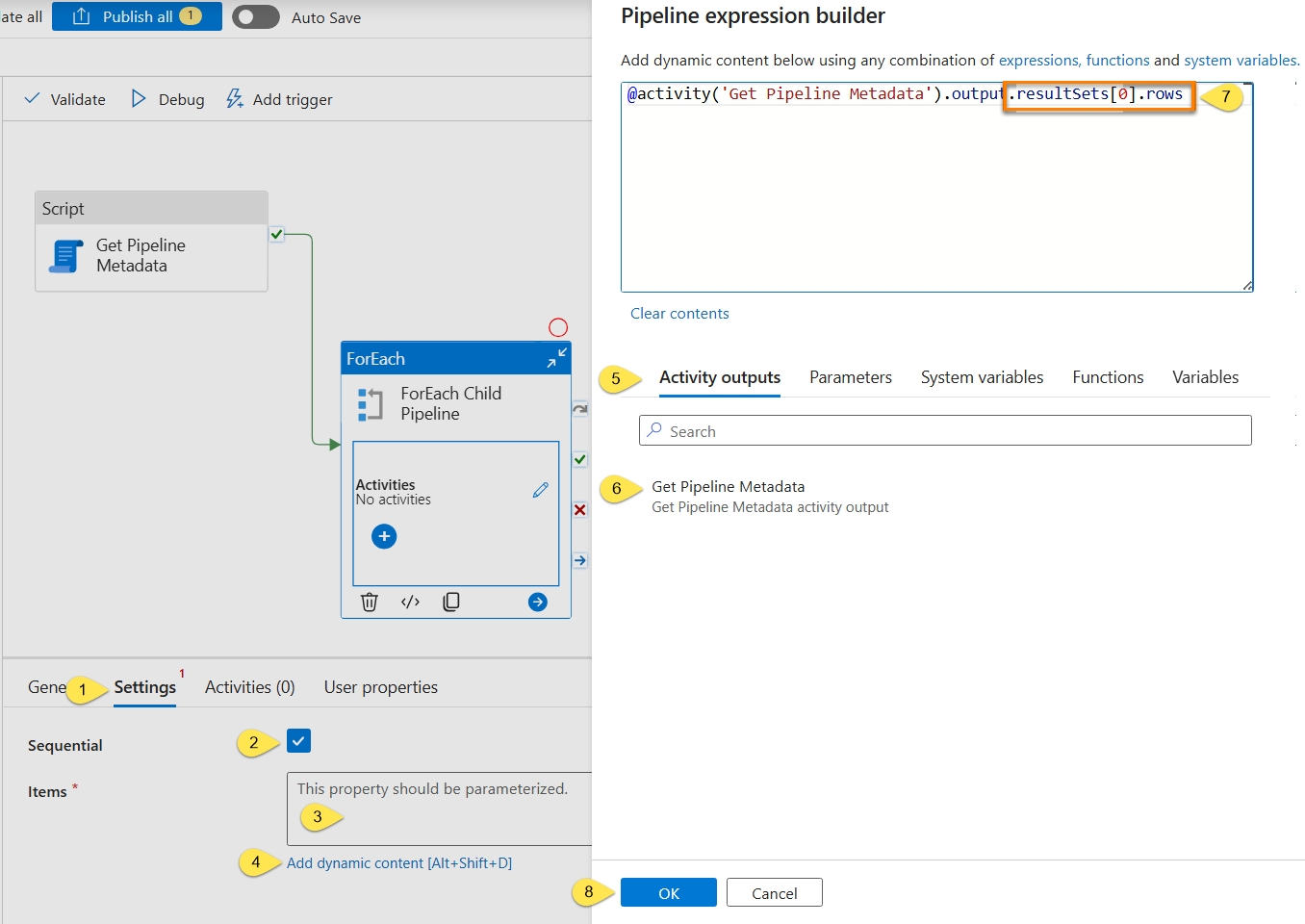

Continue configuring the Foreach activity:

- Click the “Settings” tab

- For purposes of this demo, check the “Sequential” property checkbox

- Click inside the “Items” property textbox

- The “Add dynamic content” link should display beneath the “Items” property textbox. Click the link to open the “Pipeline expression builder”

- When the “Pipeline expression builder” opens, make sure the “Activity outputs” tab is selected (it should be selected by default)

- Click “Get Pipeline Metadata” to add @activity('Get Pipeline Metadata').output to the expression textbox

- Append .resultSets[0].rows to the expression, so that it reads @activity('Get Pipeline Metadata').output.resultSets[0].rows

- Click the “OK” button to apply the expression to the Foreach activity’s “Items” property:

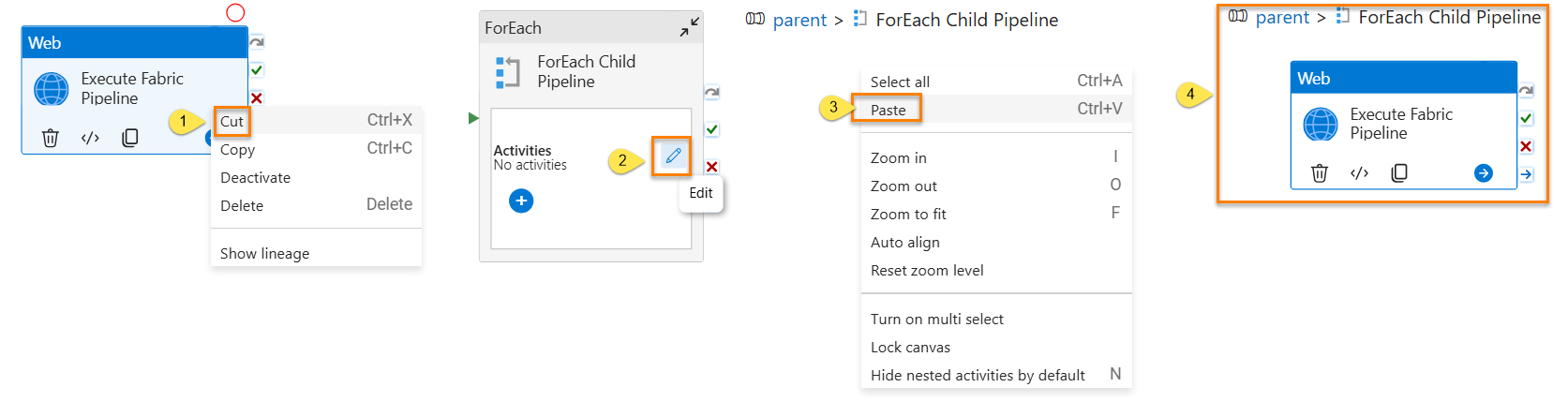
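The Script activity’s output is JSON whose resultSets array holds one entry per result set, and each entry’s rows array holds one object per row, keyed by column name. The Items expression simply walks that path. Here is a Python sketch of the same walk; the sample output is hypothetical, and the check for workspaceId and pipelineId columns is my addition, assuming the metadata query returns columns with exactly those names (the names the URL expression relies on later).

```python
from typing import Any, Dict, List

REQUIRED_COLUMNS = ("workspaceId", "pipelineId")


def get_metadata_rows(script_output: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Python equivalent of @activity('Get Pipeline Metadata').output.resultSets[0].rows."""
    result_sets = script_output.get("resultSets") or []
    if not result_sets:
        # ADF would raise an expression error here; returning [] is a choice of this sketch.
        return []
    rows = result_sets[0].get("rows", [])
    for row in rows:
        missing = [c for c in REQUIRED_COLUMNS if c not in row]
        if missing:
            raise KeyError(f"Metadata row is missing {missing}: {row}")
    return rows


if __name__ == "__main__":
    # Hypothetical Script activity output with placeholder Ids.
    sample = {"resultSets": [{"rows": [{"workspaceId": "ws-guid", "pipelineId": "child-1-guid"}]}]}
    print(get_metadata_rows(sample))
```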

The “Execute Fabric Pipeline” web activity currently sits on the parent pipeline’s surface. It needs to be inside the Foreach activity’s “Activities.”

To move the “Execute Fabric Pipeline” web activity from the parent pipeline surface to inside the “Foreach Child Pipeline” Foreach activity’s Activities:

- Right-click the “Execute Fabric Pipeline” web activity and then click “Cut”

- Click the “Activities” edit (pencil) icon on the “Foreach Child Pipeline” Foreach activity

- When the “Foreach Child Pipeline” Foreach activity’s Activities surface displays, right-click anywhere on the surface and then click “Paste”

- The “Execute Fabric Pipeline” web activity should now display on the “Foreach Child Pipeline” Foreach activity’s Activities surface:

Edit the “Execute Fabric Pipeline” Web Activity

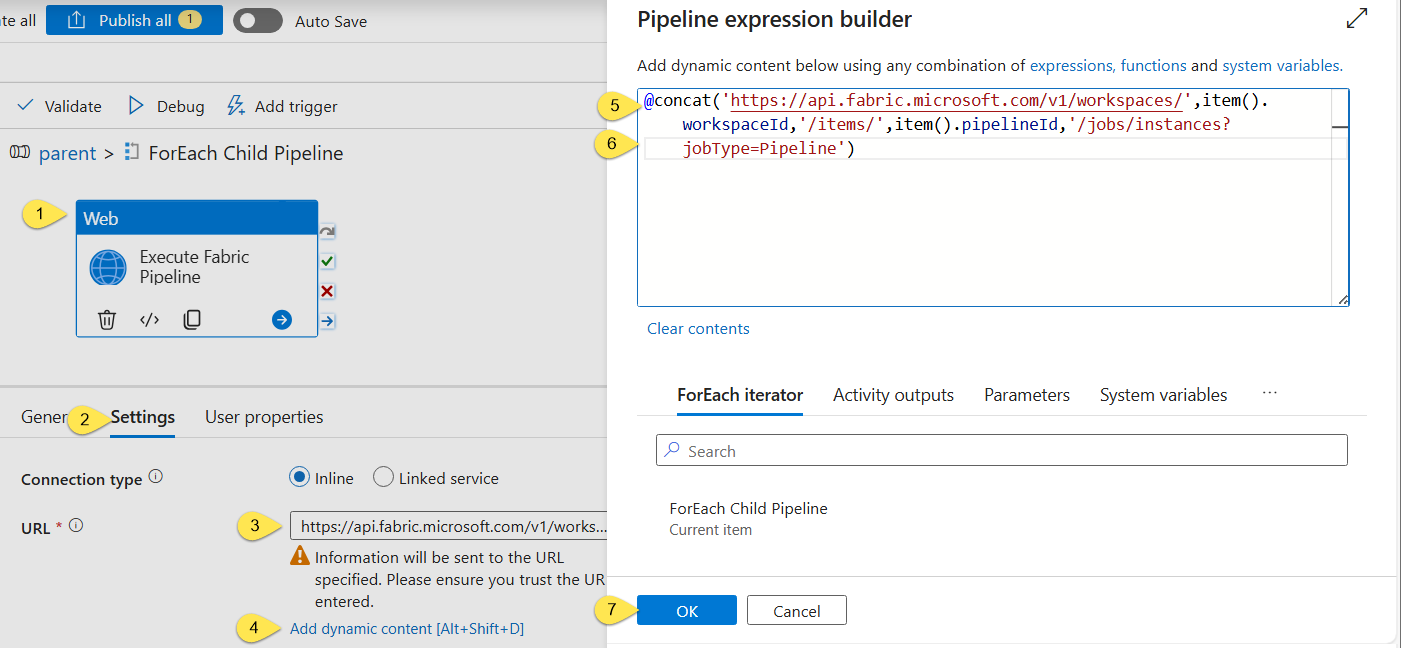

Each time the Foreach activity iterates (or enumerates… don’t get me started…), our desire is for the “Execute Fabric Pipeline” web activity to start the Fabric Data Factory pipeline for which we retrieved metadata from the “Get Pipeline Metadata” script activity.

The next step is to reconfigure the “Execute Fabric Pipeline” web activity so that it reads the workspaceId and pipelineId of the “item” currently selected by the Foreach activity as it loops, and dynamically substitutes those values where they belong in the web activity’s URL property.

Remember, the URL property represents a call to a Fabric ReST API method; a call to the method that starts execution of a Fabric Data Factory pipeline.

To edit the “Execute Fabric Pipeline” web activity,

- Select the “Execute Fabric Pipeline” web activity

- Click the “Settings” tab

- Select and copy the value of the URL property (selecting and copying not shown in the image)

- Click the “Add dynamic content” link to open the “Pipeline expression builder” blade

- Type “@concat('')” into the expression property textbox (typing not shown in the image)

- Edit the expression so that it reads “@concat('https://api.fabric.microsoft.com/v1/workspaces/',item().workspaceId,'/items/',item().pipelineId,'/jobs/instances?jobType=Pipeline')”

- Click the “OK” button to configure the “URL” property with the expression:
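To see exactly what that expression produces on each iteration, here is a Python mirror of it. The dictionary `item` stands in for the Foreach activity’s current row, and the Id values in the example are hypothetical placeholders.

```python
from typing import Mapping

FABRIC_WORKSPACES = "https://api.fabric.microsoft.com/v1/workspaces/"


def execute_fabric_pipeline_url(item: Mapping[str, str]) -> str:
    """Python mirror of the ADF expression
    @concat('https://api.fabric.microsoft.com/v1/workspaces/', item().workspaceId,
            '/items/', item().pipelineId, '/jobs/instances?jobType=Pipeline')
    where `item` stands in for the Foreach activity's current row.
    """
    return "".join([
        FABRIC_WORKSPACES,
        item["workspaceId"],
        "/items/",
        item["pipelineId"],
        "/jobs/instances?jobType=Pipeline",
    ])


if __name__ == "__main__":
    # Hypothetical row; real values come from the metadata database.
    row = {"workspaceId": "ws-guid", "pipelineId": "pipeline-guid"}
    print(execute_fabric_pipeline_url(row))
    # https://api.fabric.microsoft.com/v1/workspaces/ws-guid/items/pipeline-guid/jobs/instances?jobType=Pipeline
```

ADF expressions also support string interpolation, so https://api.fabric.microsoft.com/v1/workspaces/@{item().workspaceId}/items/@{item().pipelineId}/jobs/instances?jobType=Pipeline should produce the same URL; concat is simply the form used here.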

Let’s Test!

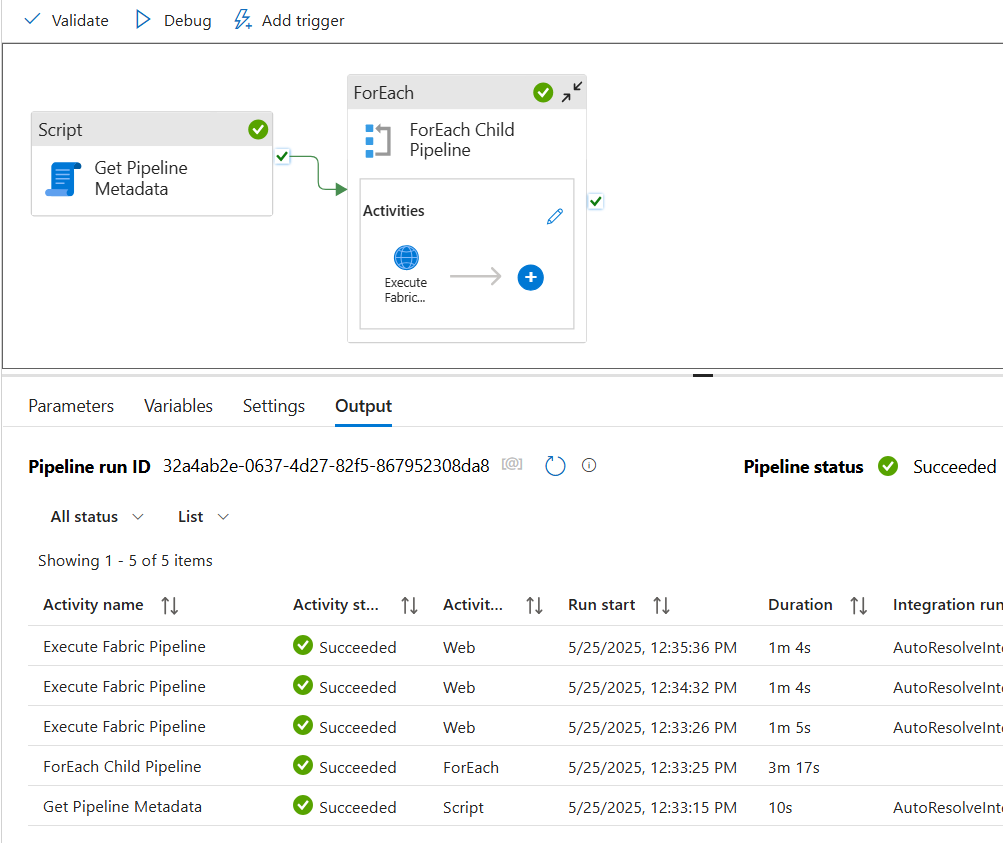

Click the “Debug” button to start a test-execution of the parent pipeline:


If all goes as planned, the parent pipeline will execute and succeed:

Verify the pipeline behaved as hoped by navigating to the Monitor page of Fabric Data Factory:

In this case, everything succeeded and did what we hoped it would.

Both are important.

Conclusion

This has been a long and winding blog post. Believe me, I considered ways to shorten it and to reduce its complexity. This is the best I could do.

If you have questions or concerns, please reach out to me.

Need Help?

Consulting & Services

Enterprise Data & Analytics delivers data engineering consulting with ADF and Fabric, Data Architecture Strategy Reviews, and Data Engineering Code Reviews! Let our experienced teams lead your enterprise data integration implementation. EDNA data engineers possess experience in Azure Data Factory, Fabric Data Factory, Snowflake, and SSIS. Contact us today!

Training

We deliver Fabric Data Factory training live and online. Subscribe to Premium Level to access all my recorded training – which includes recordings of previous Fabric Data Factory trainings – for a full year.

:{>
