A week or so back, I streamed 100% Efficient Data Integration, which you can view below (from LinkedIn) or by clicking the link to the YouTube recording:

I share this SSIS Design Pattern in my Expert SSIS course. I next deliver Expert SSIS 14-15 Dec 2020. Also, Expert SSIS is on sale for 50% off as part of my Cyber Monday Sale at the time of this writing. If you are interested in saving even more while getting more training, I highly recommend my Live ADF and SSIS Class Season Pass – on sale for 67% off. Grab a Season Pass and attend all of my live SSIS and ADF training for a full year!

</payingthebills>

A Question

A student of my SSIS training asked why I place vertical pipe delimiters between column values when calculating the hash value for a row. I sent back a quick response and then informed him that he’d inspired a blog post.

Hence, this blog post.

To understand what I am talking about, you need to know that I define a 100% efficient data integration operation as a data integration process – such as an SSIS package or ADF pipeline – that loads only new and changed rows from a source. I explain this in the video. To achieve 100% efficiency, I use the T-SQL HashBytes function for change detection.

The Setup

The AdventureWorks2014.Person.Person (or any recent version of AdventureWorks) table is the source. I create and populate a copy of AdventureWorks2014.Person.Person in the TestDB.dbo.Person table. I then add a column named HashValue – binary(20) NULL – to the TestDB.dbo.Person table.

I simulate new rows in the AdventureWorks2014.Person.Person table by deleting some rows from the TestDB.dbo.Person table.

I simulate updated rows in the AdventureWorks2014.Person.Person table by updating some rows from the TestDB.dbo.Person table.

HashBytes for Updated Rows

The HashBytes function is not used to detect new rows. New rows are detected using a Left Outer Join combined with criteria that returns rows with null business keys found in the target table.

The HashBytes function is used to detect changed rows. Changed rows are rows for which some version of the row already exists in the target table, but for which a newer (updated, changed) version of the same row exists in the source table.

The first step is to update the HashValue column in the target table, for which I use the follow T-SQL statement:

Update dbo.Person

Set HashValue =

HashBytes('SHA1',

Convert(varchar,BusinessEntityID) +

'|' + Convert(varchar,PersonType) +

'|' + Convert(varchar,NameStyle) +

'|' + Convert(varchar,Coalesce(Title, '')) +

'|' + Convert(varchar(50),FirstName) +

'|' + Convert(varchar(50),Coalesce(MiddleName, '')) +

'|' + Convert(varchar(50),LastName) +

'|' + Convert(varchar,Coalesce(Suffix, '')) +

'|' + Convert(varchar,EmailPromotion) +

'|' + Convert(varchar(36),rowguid) +

'|' + Convert(varchar,ModifiedDate)

)

The HashBytes function accepts two parameters (arguments):

- Hash algorithm name

- A string of character data

To select changed rows (only), the HashValue is calculated for each source row in the AdventureWorks2014.Person.Person table, joined to the target TestDB.dbo.Person table on the business key, and then the calculated HashValue from the AdventureWorks2014.Person.Person table is compared to the (recently-updated) HashValue column in the TestDB.dbo.Person table.

I can hear some of you thinking…

“Why Delimiters, Andy?”

That is an excellent question. I’m glad you asked!

First, I want to remind you to always consider correcting human data errors when designing a data integration or ETL (Extract, Transform, and Load) or even ELT (Extract, Load, and Transform). Error correction is easy to overlook while our data integration minds are a-swirl with thoughts of change detection (especially when we’re attempting 100% efficiency).

Before you decide, “This column value will never be updated,” consider the source of the data. Is it even remotely possible someone may “mistype” the data at some point in the data’s creation or existence?

This is also an excellent question. If the answer is, “Well, Andy, maybe,” I officially welcome you to Data Integration. The answer is always, “maybe.”

The Curious (Use) Case of the Fat-Fingered Name Field Values

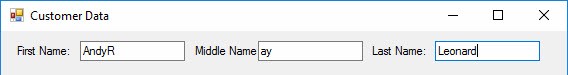

Suppose a Customer Service Representative at your enterprise – or a CSR from an enterprise from which your enterprise receives data – mistypes my name:

The CSR clearly meant to type “Andy Ray Leonard,” but the press of the Tab key lost the race with the first letter of my middle name, “R.”

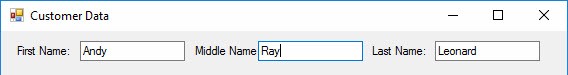

Later, the CSR realizes the error when viewing a report, re-opens my record, and corrects my First and Middle name field values:

When the 100% efficient data integration process executes, HashValue values will be generated for the source and target rows.

What happens if there are no delimiters included in the string to be hashed (assuming no other values changed)? The hash is generated for:

AndyRayLeonard (from AndyR ay Leonard – the old value); and

AndyRayLeonard (from Andy Ray Leonard – the updated value).

The HashBytes value for both the source and target rows will be identical and the updated row will be missed.

In data warehousing, we call missing updates bad. It’s the ETL equivalent of crossing the streams.

What happens if there are delimiters included in the string to be hashed (assuming no other values changed)? The hash is generated for:

AndyR|ay|Leonard (from AndyR ay Leonard – the old value); and

Andy|Ray|Leonard (from Andy Ray Leonard – the updated value).

The HashBytes value for both the source and target rows will be different and the updated row will be identified.

This is good.

Conclusion

I love helping people learn better SSIS Design Patterns!

Enterprise Data & Analytics offers comprehensive Data Warehouse, ETL, SSIS, and Azure Data Factory knowledge to your enterprise. We engage in three phases:

- Training – we offer experience-based training, sharing knowledge from experience with one person wearing all the hats to leading dozens of developers on enterprise teams.

- Partnering – our team members join your enterprise team members at the developer and developer manager levels to shepherd (freshly-trained) team members as we – together – apply data integration patterns to your enterprise data.

- Sustaining – after the code is implemented, tested, documented, and signed-off; our team members stand ready to support your enterprise in the event of a disaster, re-join your team to jumpstart the next version, or merely to answer a question.

Contact us today!

If you are using an version of SQL Server prior to 2016, how do you handle the 8000 character maximum? Particularly since you are concatenating multiple columns?

Hi Ricky,

I use SQLCLR stored procedures to manage hash logic when I exceed 8,000 characters.

Hope this helps,

Andy

I’ve been using a similar method (only difference is the delimiter I used) for a long time but saw this good article and had to make a comment on the great way that Andy explained why the delimiter is needed.

THANK YOU ANDY!