Why Use a Framework?

The first answer is, “Maybe you don’t need or want to use a framework.” If your enterprise data integration consists of a small number of SSIS packages, a framework could be an extra layer of metadata-management hassle that, frankly, you can live without. We will unpack this in a minute…

“You Don’t Need a Framework”

I know some really smart SSIS developers and consultants for whom this is the answer.

I know some less-experienced SSIS developers and consultants for whom this is the answer.

Some smart and less-experienced SSIS developers and consultants may change their minds once they gain at-scale experience and encounter some of the problems a framework solves.

Some will not.

If that last paragraph rubbed you the wrong way, I ask you to read the next one before closing this post:

One thing to consider: If you work with other data integration platforms – such as DataStage or Informatica – you will note these platforms include framework functionality built-in. Did the developers of these platforms include a bunch of unnecessary overhead in their products? No. They built in framework functionality because framework functionality is a solution for common data integration issues encountered at enterprise scale.

If your data integration consultant tells you that you do not need a framework, one of two things is true:

1. They are correct, you do not need a framework; or

2. They have not yet encountered the problems a framework solves, issues that only arise when one architects a data integration solution at scale.

– Andy, circa 2018

Data Integration Framework: Defined

A data integration framework manages three things:

- Execution

- Configuration

- Logging

This post focuses on…

Execution

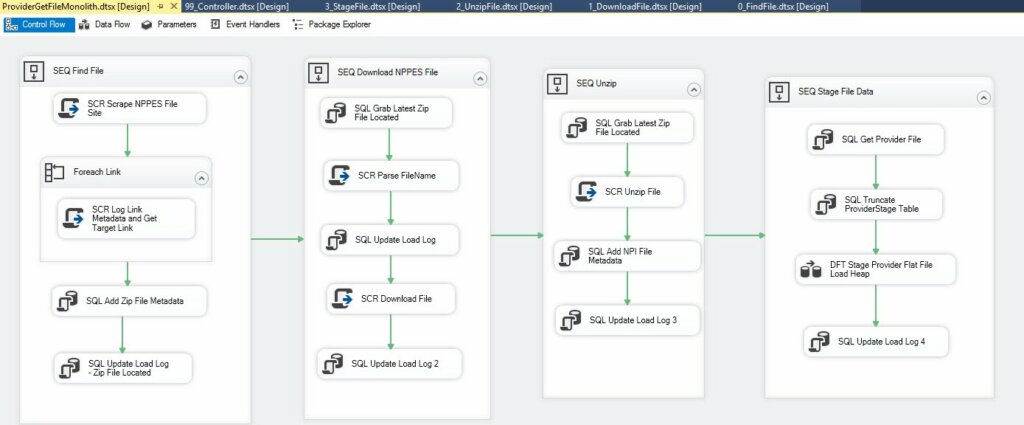

If you read the opening paragraph of this post and thought, “I don’t need a framework for SSIS. I have a small number of SSIS packages in my enterprise,” I promised we would unpack that thought. You may have a small number of packages because you built one or more monolith(s). A monolith is one large package containing all the logic required to perform a data integration operation – such as staging from sources.

The monolith shown above is from a (free!) webinar Kent Bradshaw and I are delivering on 17 Apr 2019. It’s called Loading Medical Data with SSIS. In it, we refactor this monolith into four smaller packages – one for each Sequence Container – and add a (Batch) Controller package to execute them in order. I can hear some of you thinking…

“Why Refactor, Andy?”

I’m glad you asked! Despite the fact that its name contains the name of a popular relational database engine (SQL Server), SQL Server Integration Services is a software development platform. If you search for software development best practices, you will find something called Separation of Concerns near the top of everyone’s list.

One component of separation of concerns is decoupling chunks of code into smaller modules of encapsulated functionality. Applied to SSIS, this means monoliths must die:

If your SSIS package has a dozen Data Flow Tasks and one fails, you have to dig through the logs – a little, not a lot; but it’s at 2:00 AM – to figure out what failed and why. You can cut down the “what failed” part by building SSIS packages that contain a single Data Flow Task per package.

If you took that advice, you are now the proud owner of a bunch of SSIS packages. How do you manage execution?

Solutions

There are a number of solutions. You could:

- Daisy-chain package execution by using an Execute Package Task at the end of each SSIS package Control Flow that starts the next SSIS package.

- Create a Batch Controller SSIS package that uses Execute Package Tasks to execute each package in the desired order and degree of parallelism.

- Delegate execution management to a scheduling solution (SQL Agent, etc.).

- Use an SSIS Framework.

- Some combination of the above.

- None of the above (there are other options…).

Daisy-Chain

Daisy-chaining package execution has some benefits:

- Easy to interject a new SSIS package into the workflow; simply add the new package and update the preceding package’s Execute Package Task.

Daisy-chaining package execution has some drawbacks:

- Adding a new package to daisy-chained solutions almost always requires deployment of two SSIS packages – the package before the new SSIS package (with a reconfigured Execute Package Task – or an update to the ) along with the new SSIS package. The exception is a new first package. A new last package would also require the “old last package” be updated.
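To see why daisy-chaining usually costs two deployments, it can help to think of the chain as a linked list in which each package knows only the next one. The sketch below is a hypothetical model, not SSIS code: package names are made up, and a dictionary entry stands in for each package's Execute Package Task.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical model: each package's Execute Package Task calls exactly one
# "next" package (None marks the last package in the chain).
Chain = Dict[str, Optional[str]]


def insert_package(chain: Chain, first: str, new_pkg: str,
                   after: Optional[str]) -> Tuple[str, Set[str]]:
    """Insert new_pkg after `after` (None means "make it the first package").

    Returns the first package of the chain and the set of packages that
    must be redeployed because their contents changed.
    """
    if after is None:
        # The new first package calls the old first package; nothing else changes.
        chain[new_pkg] = first
        return new_pkg, {new_pkg}
    # The predecessor's Execute Package Task must be repointed at new_pkg,
    # and new_pkg inherits the predecessor's old "next" package.
    chain[new_pkg] = chain[after]
    chain[after] = new_pkg
    return first, {after, new_pkg}


def run_order(chain: Chain, first: str) -> List[str]:
    """Follow the Execute Package Task links from the first package."""
    order: List[str] = []
    pkg: Optional[str] = first
    while pkg is not None:
        order.append(pkg)
        pkg = chain[pkg]
    return order


chain: Chain = {"Stage1.dtsx": "Stage2.dtsx", "Stage2.dtsx": "Stage3.dtsx", "Stage3.dtsx": None}
first, redeploy = insert_package(chain, "Stage1.dtsx", "Stage2a.dtsx", after="Stage2.dtsx")
print(run_order(chain, first))  # ['Stage1.dtsx', 'Stage2.dtsx', 'Stage2a.dtsx', 'Stage3.dtsx']
print(sorted(redeploy))         # ['Stage2.dtsx', 'Stage2a.dtsx']
```

Inserting after the last package returns the old last package plus the new one, and inserting a new first package returns only the new package, which matches the exceptions described above.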

Batch Controller

Using a Batch Controller package has some benefits:

- Relatively easy to interject a new SSIS package into the workflow. As with daisy-chain, add the new package and modify the Controller package by adding a new Execute Package Task to kick off the new package when desired.

Batch-controlling package execution has some drawbacks:

- Adding a new package to a batch-controlled solution always requires deployment of two SSIS packages – the new SSIS package and the updated Controller SSIS package.
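A Batch Controller is essentially an ordered list of stages, where packages in the same stage may run in parallel. The sketch below models that idea in plain Python under stated assumptions: the controller definition, the package names, and `fake_run` (a stand-in for actually executing a package) are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical Batch Controller definition. Stages run in order; packages
# within a stage run in parallel. Editing this list is the equivalent of
# adding an Execute Package Task to the Controller package, which is why the
# Controller must be redeployed along with any new package.
CONTROLLER: List[List[str]] = [
    ["LoadA.dtsx", "LoadB.dtsx"],  # stage 1: run in parallel
    ["LoadC.dtsx"],                # stage 2: starts after stage 1 finishes
]


def run_controller(stages: List[List[str]],
                   run_package: Callable[[str], str],
                   max_parallel: int = 4) -> List[List[str]]:
    results: List[List[str]] = []
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        for stage in stages:
            # list() waits for every package in this stage before moving on;
            # results come back in the order the packages are listed.
            results.append(list(pool.map(run_package, stage)))
    return results


def fake_run(package: str) -> str:
    # Stand-in for actually executing an SSIS package.
    return f"{package}: succeeded"


print(run_controller(CONTROLLER, fake_run))
```

The order and degree of parallelism live inside the controller itself, so any change to the workflow is a change to the controller.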

Scheduler Delegation

Depending on the scheduling utility in use, adding a package to the workflow can be really simple or horribly complex. I’ve seen both and I’ve also seen methods of automation that mitigate horribly-complex schedulers.

Use a Framework

I like metadata-driven SSIS frameworks because they’re metadata-driven. Why’s metadata-driven so important to me? To the production DBA or Operations people monitoring the systems in the middle of the night, SSIS package execution is just another batch process using server resources. Some DBAs and operations people comprehend SSIS really well, some do not. We can make life easier for both by surfacing as much metadata and operational logging – ETL instrumentation – as possible.

Well-architected metadata-driven frameworks reduce enterprise innovation friction by:

- Reducing maintenance overhead

- Providing batched execution with discrete IDs

- Allowing packages to live anywhere

Less Overhead

Adding an SSIS package to a metadata-driven framework is a relatively simple two-step process:

- Deploy the SSIS package (or project).

- Just add metadata.
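Here is a minimal sketch of the “just add metadata” step, assuming a hypothetical metadata shape (application, execution order, Catalog folder, project, package). A real framework, including SSIS Framework Community Edition, stores this kind of metadata in database tables and its schema may differ; the Python list below only stands in for such a table.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ApplicationPackage:
    """Hypothetical metadata row; a real framework keeps these in database tables."""
    application: str
    execution_order: int
    folder: str
    project: str
    package: str


METADATA: List[ApplicationPackage] = [
    ApplicationPackage("LoadSources", 10, "Staging", "StagingProject", "StageA.dtsx"),
    ApplicationPackage("LoadSources", 20, "Staging", "StagingProject", "StageB.dtsx"),
]


def execution_plan(application: str, metadata: List[ApplicationPackage]) -> List[str]:
    """Packages for one application, in execution order."""
    rows = [r for r in metadata if r.application == application]
    return [r.package for r in sorted(rows, key=lambda r: r.execution_order)]


# Step 1 (deploy StageA2.dtsx) happens outside this sketch.
# Step 2: just add metadata. No existing package is modified or redeployed.
METADATA.append(ApplicationPackage("LoadSources", 15, "Staging", "StagingProject", "StageA2.dtsx"))
print(execution_plan("LoadSources", METADATA))  # ['StageA.dtsx', 'StageA2.dtsx', 'StageB.dtsx']
```

Leaving gaps in the execution order (10, 20, …) is one way to slot a new package between existing ones without renumbering anything.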

A nice bonus? Metadata stored in tables can be readily available to both production DBAs and Operations personnel… or anyone, really, with permission to view said data.

Batched Execution with Discrete IDs

An SSIS Catalog-integrated framework can overcome one of my pet peeves with using Batch Controllers. If you call packages using the Parent-Child design pattern implemented with the Execute Package Task, each child execution shares the same Execution / Operation ID with the parent package. While it’s mostly not a big deal, I feel the “All Executions” report is… misleading.

Using a Catalog-integrated framework gives me an Execution / Operation ID for each package executed – the parent and each child.
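One way a Catalog-integrated framework can get a separate ID per package is to start each package through the SSIS Catalog stored procedures (`catalog.create_execution`, `catalog.set_execution_parameter_value`, `catalog.start_execution`) instead of through an Execute Package Task. The sketch below only builds the T-SQL text for one package; the helper names are hypothetical, and this is not necessarily how SSIS Framework Community Edition does it.

```python
def tsql_literal(value: str) -> str:
    """Render a T-SQL Unicode string literal, doubling embedded single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def start_package_script(folder: str, project: str, package: str) -> str:
    """Build one T-SQL batch that creates and starts a Catalog execution.

    Each call to catalog.create_execution returns a new execution_id, so a
    framework that runs this once per package gets one ID per package.
    """
    return "\n".join([
        "DECLARE @execution_id bigint;",
        "EXEC SSISDB.catalog.create_execution",
        f"    @folder_name = {tsql_literal(folder)},",
        f"    @project_name = {tsql_literal(project)},",
        f"    @package_name = {tsql_literal(package)},",
        "    @execution_id = @execution_id OUTPUT;",
        "-- Wait for completion so the framework can start the next package in order.",
        "EXEC SSISDB.catalog.set_execution_parameter_value @execution_id,",
        "    @object_type = 50, @parameter_name = N'SYNCHRONIZED', @parameter_value = 1;",
        "EXEC SSISDB.catalog.start_execution @execution_id;",
        "SELECT @execution_id AS execution_id;",
    ])


print(start_package_script("Staging", "StagingProject", "StageA.dtsx"))
```

Because every package gets its own `create_execution` call, the “All Executions” report lists each child package as its own execution rather than folding it into the parent's.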

“Dude, Where’s My Package?”

Ever try to configure an Execute Package Task to execute a package in another SSIS project? Or in another Catalog folder? You cannot.* By default, the Execute Package Task in a Project Deployment Model SSIS project (also the default) cannot “see” SSIS packages that reside in other SSIS projects or which are deployed to other SSIS Catalog Folders.

“Why do I care, Andy?”

Excellent question. Another benefit of separation of concerns is it promotes code reuse. Imagine I have a package named ArchiveFile.dtsx that, you know, archives flat files once I’m done loading them. Suppose I want to use that highly-parameterized SSIS package in several orchestrations? Sure, I can Add-Existing-Package my way right out of this corner. Until…

What happens when I want to modify the packages? Or find a bug? This is way messier than simply being able to modify / fix the package, test it, and deploy it to a single location in Production where a bajillion workflows access it. Isn’t it?

It is.

Messy stinks. Code reuse is awesome. A metadata-driven framework can access SSIS packages that are deployed to any SSIS project in any SSIS Catalog folder on an instance. Again, it’s just metadata.
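To illustrate the reuse point, here is a sketch in which two hypothetical applications both reference a single deployed copy of ArchiveFile.dtsx in a shared folder. The application, folder, and project names are assumptions, and the `\SSISDB\folder\project\package` path form (the one you may recognize from SQL Agent job steps) is used only to make each package's location visible.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical metadata rows: application, order, folder, project, package.
# ArchiveFile.dtsx is deployed once, to a shared "Utility" folder/project,
# and referenced by two different applications.
ROWS: List[Tuple[str, int, str, str, str]] = [
    ("LoadClaims", 10, "Claims", "ClaimsETL", "StageClaims.dtsx"),
    ("LoadClaims", 20, "Utility", "FileOps", "ArchiveFile.dtsx"),
    ("LoadLabs", 10, "Labs", "LabsETL", "StageLabs.dtsx"),
    ("LoadLabs", 20, "Utility", "FileOps", "ArchiveFile.dtsx"),
]


def catalog_path(folder: str, project: str, package: str) -> str:
    """Display a package's location in the SSIS Catalog."""
    return f"\\SSISDB\\{folder}\\{project}\\{package}"


def plan(rows: List[Tuple[str, int, str, str, str]]) -> Dict[str, List[str]]:
    """Group packages by application, in execution order, as Catalog paths."""
    plans: Dict[str, List[str]] = defaultdict(list)
    for app, _order, folder, project, package in sorted(rows, key=lambda r: (r[0], r[1])):
        plans[app].append(catalog_path(folder, project, package))
    return dict(plans)


for app, paths in plan(ROWS).items():
    print(app, paths)
```

Fixing a bug in ArchiveFile.dtsx then means one test and one deployment to the Utility folder; both applications pick up the change because their metadata points at the same location.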

*I know a couple ways to “jack up” an Execute Package Task and make it call SSIS Packages that reside in other SSIS Projects or in other SSIS Catalog Folders. I think this is such a bad idea for so many reasons, I’m not even going to share how to do it. If you are here…

… Just use a framework.

SSIS Framework Community Edition

At DILM Suite, Kent Bradshaw and I give away an SSIS Framework that manages execution – for free! I kid you not. SSIS Framework Community Edition is not only free, it’s also open source.
